Predictive Checks of Statistical Models for SCD Data

An Old and Under-Appreciated Method for Assessing Whether a Model is Any Good

2025-05-15

Session overview

Collective brain dump (15 minutes)

Predictive checks for statistical models of single-case data (30-45 minutes)

Small- and large-group discussion (30-45 minutes)

Brain-dump questions

Editor perspective: What common problems are you seeing in manuscript review with respect to statistical analysis and reporting? Have you noticed any improvements in application of statistical analysis?

Researcher perspective: What challenges or limitations are you running into when applying statistical analyses that you think could enhance your work?

Student perspective: Have you seen any applications of statistical analysis that you found especially compelling? Have you learned about any statistical methodology work that you find exciting or compelling?

Why engage in statistical analysis of single-case data?

Models for SCD data are becoming more complex and technical.

We need tools for evaluating the plausibility and credibility of statistical analyses, even when they are based on complex statistical models.

What is a parametric statistical model?

A succinct, highly stylized description of the process of collecting data.

A mathematical story about where you got your data.

A model describes not just the data you obtained, but also other possible outcomes of the study.

- This is what lets us make statements about uncertainty in parameter estimates and inferences.

- A credible parametric model should tell believable, realistic stories.

Predictive Checks

Predictive checks are a general technique for evaluating the fit of a parametric statistical model by examining other possible data generated from the model.

A well-known part of the Bayesian model development process (Berkhof, Mechelen, and Hoijtink 2000; Gelman et al. 2020; Sinharay and Stern 2003)

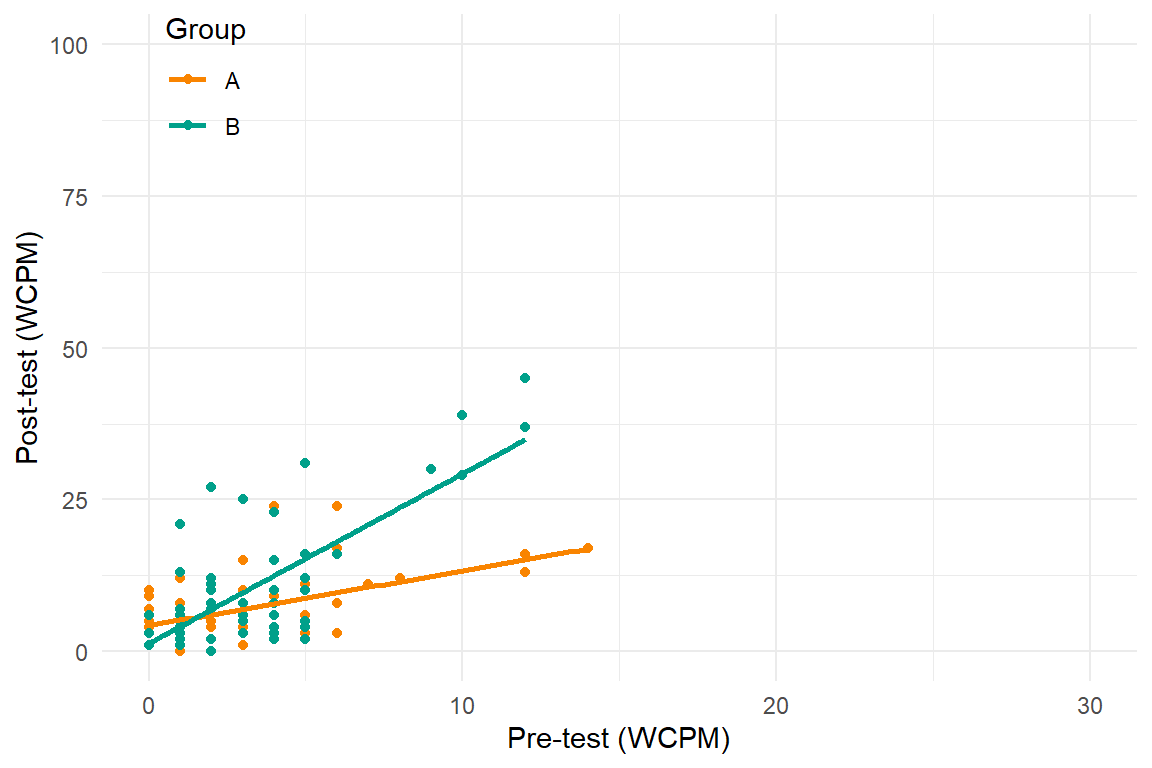

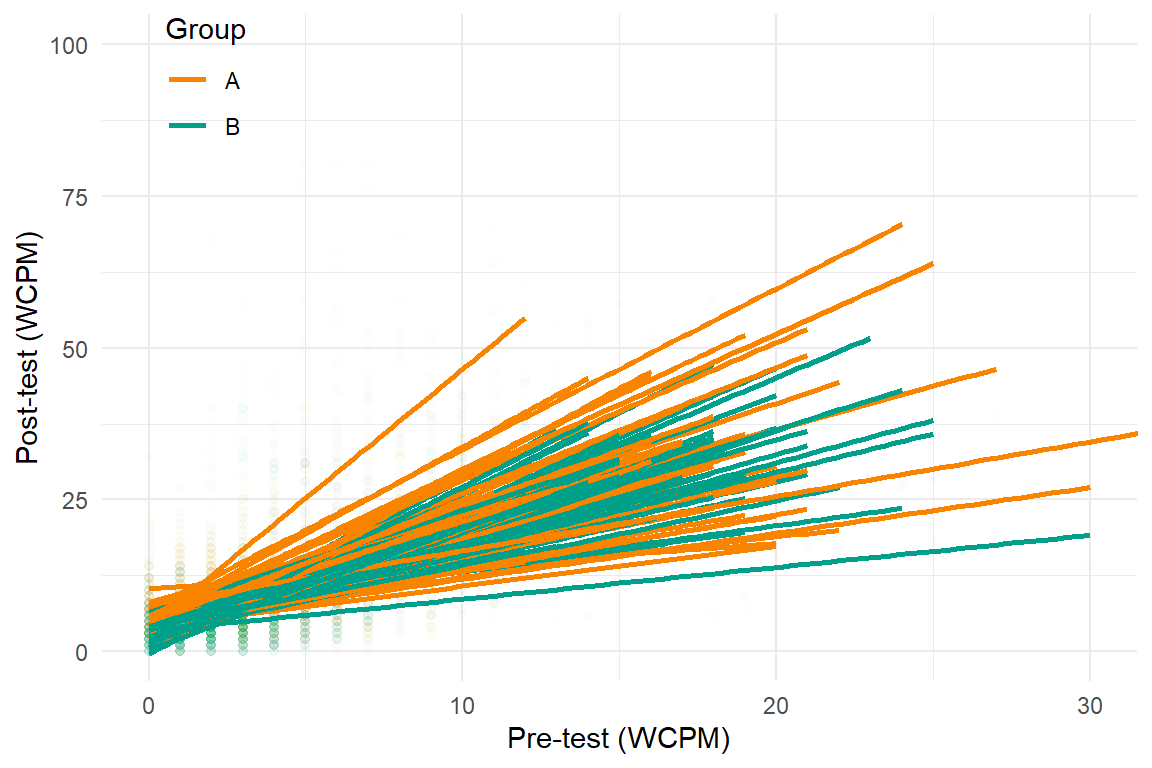

Grekov, Pustejovsky, and Klingbeil (2024) explore the use of predictive checks for meta-analytic models of oral reading fluency outcomes in SCDs.

- I will demonstrate predictive checking of a Bayesian multilevel model estimated using Markov Chain Monte Carlo.

Predictive Checking Workflow

Fit a statistical model.

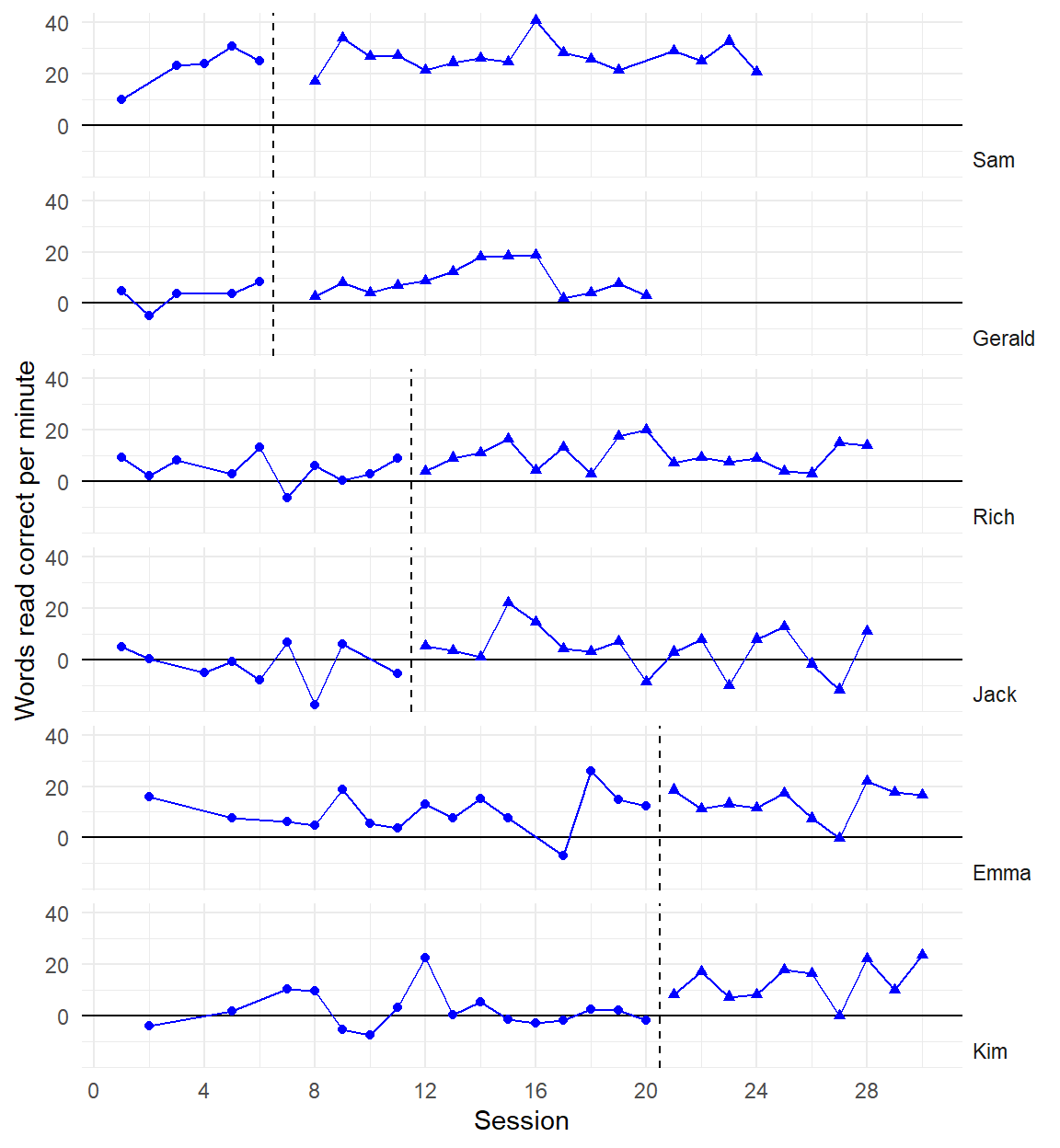

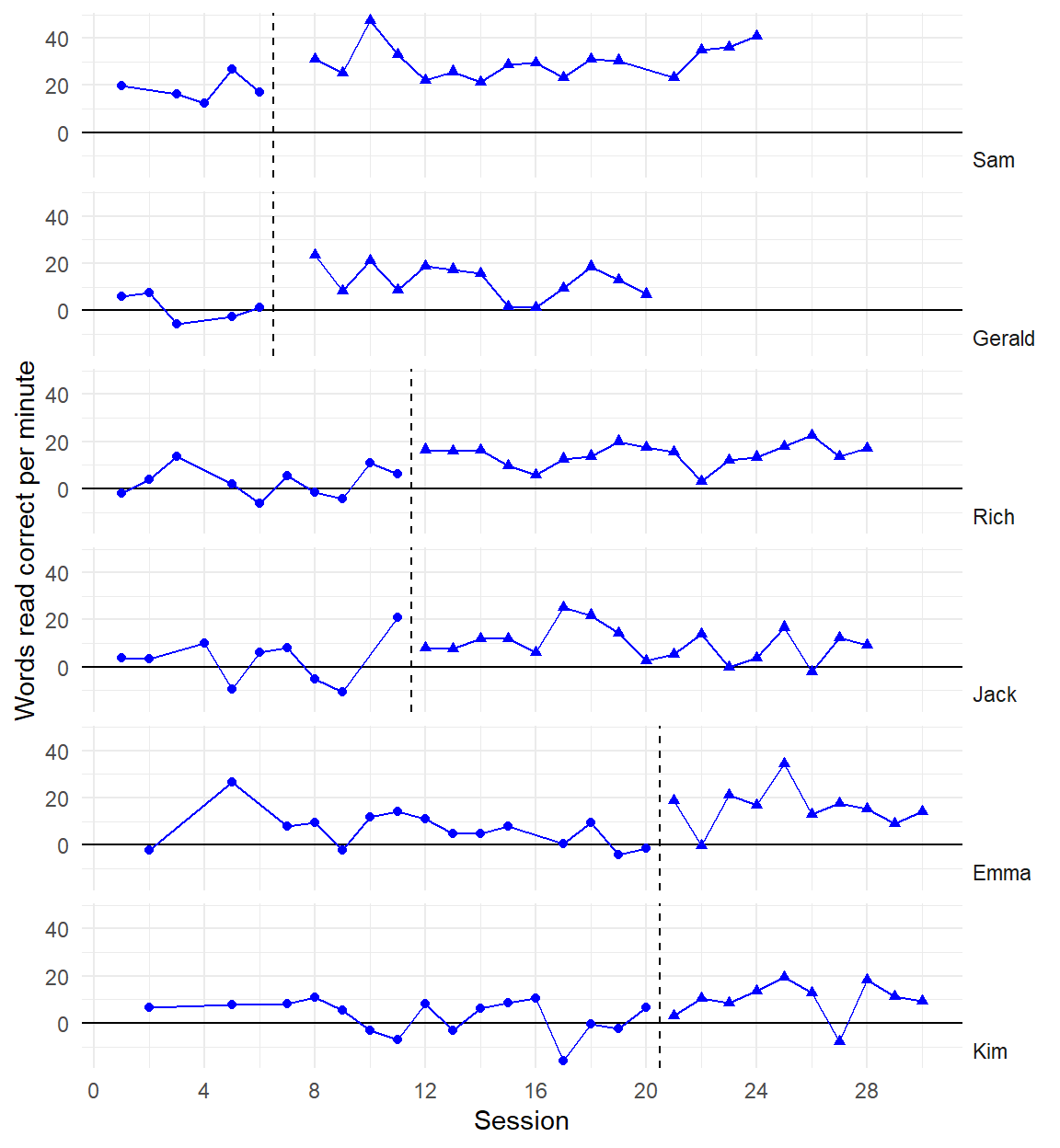

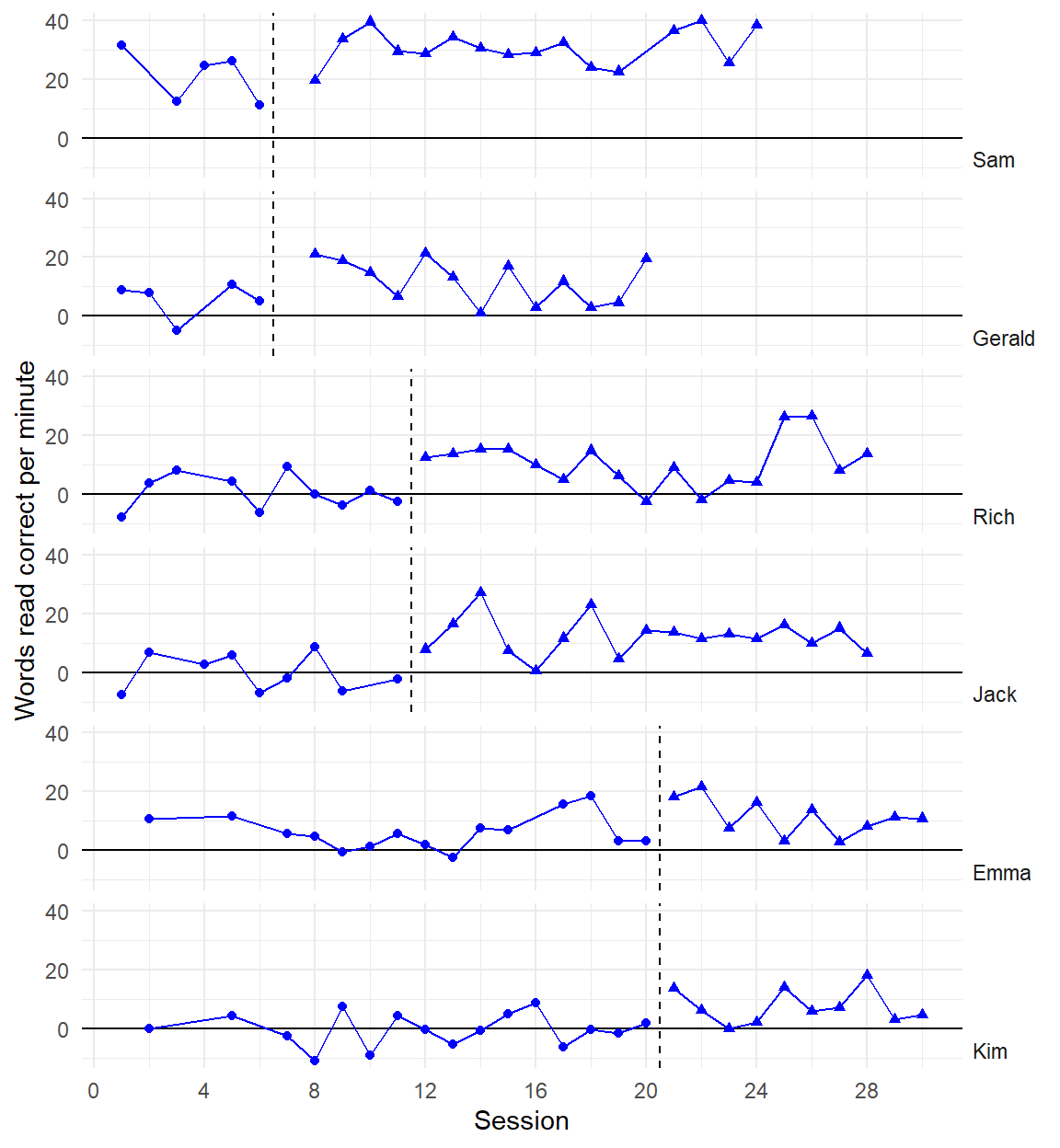

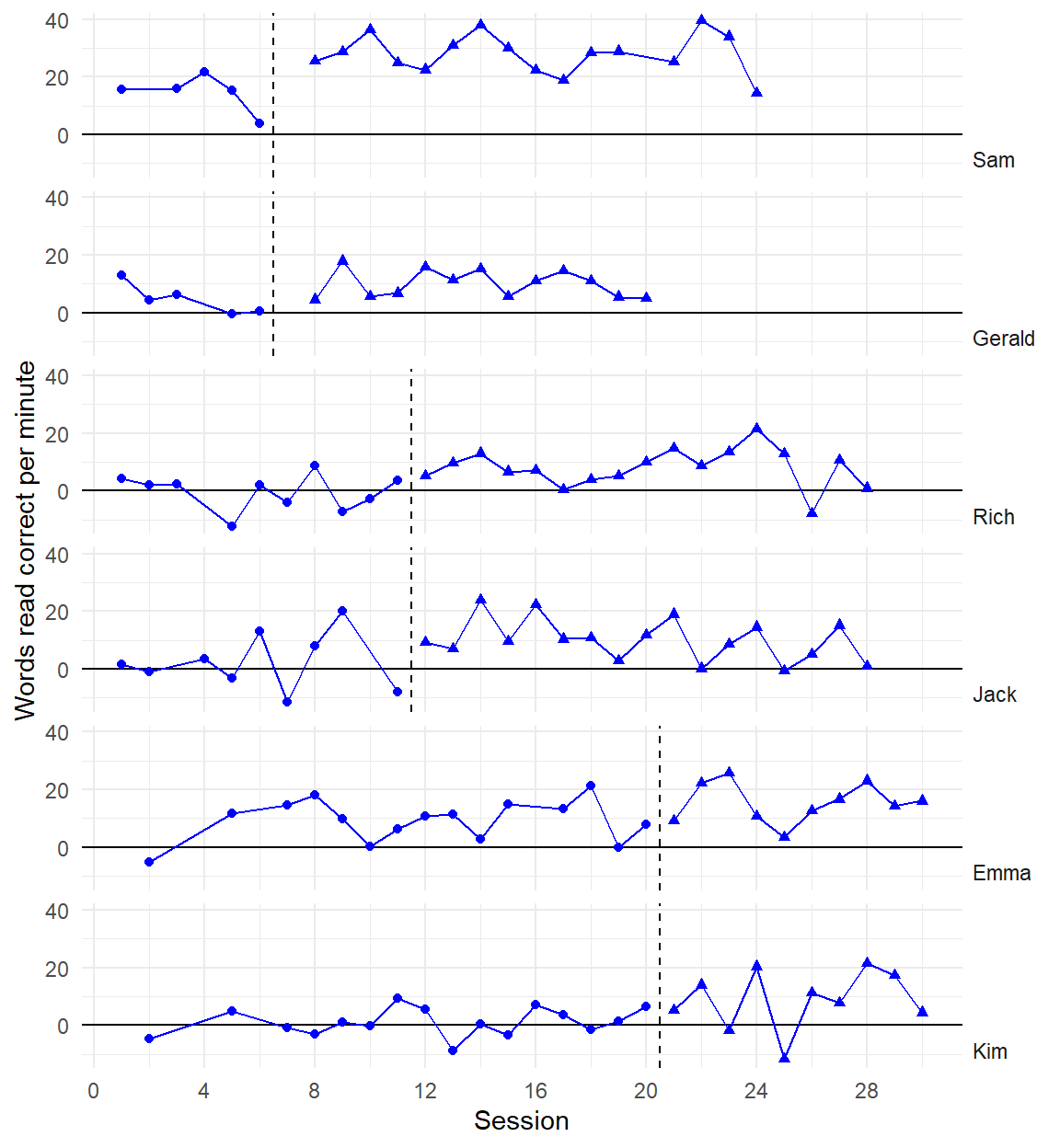

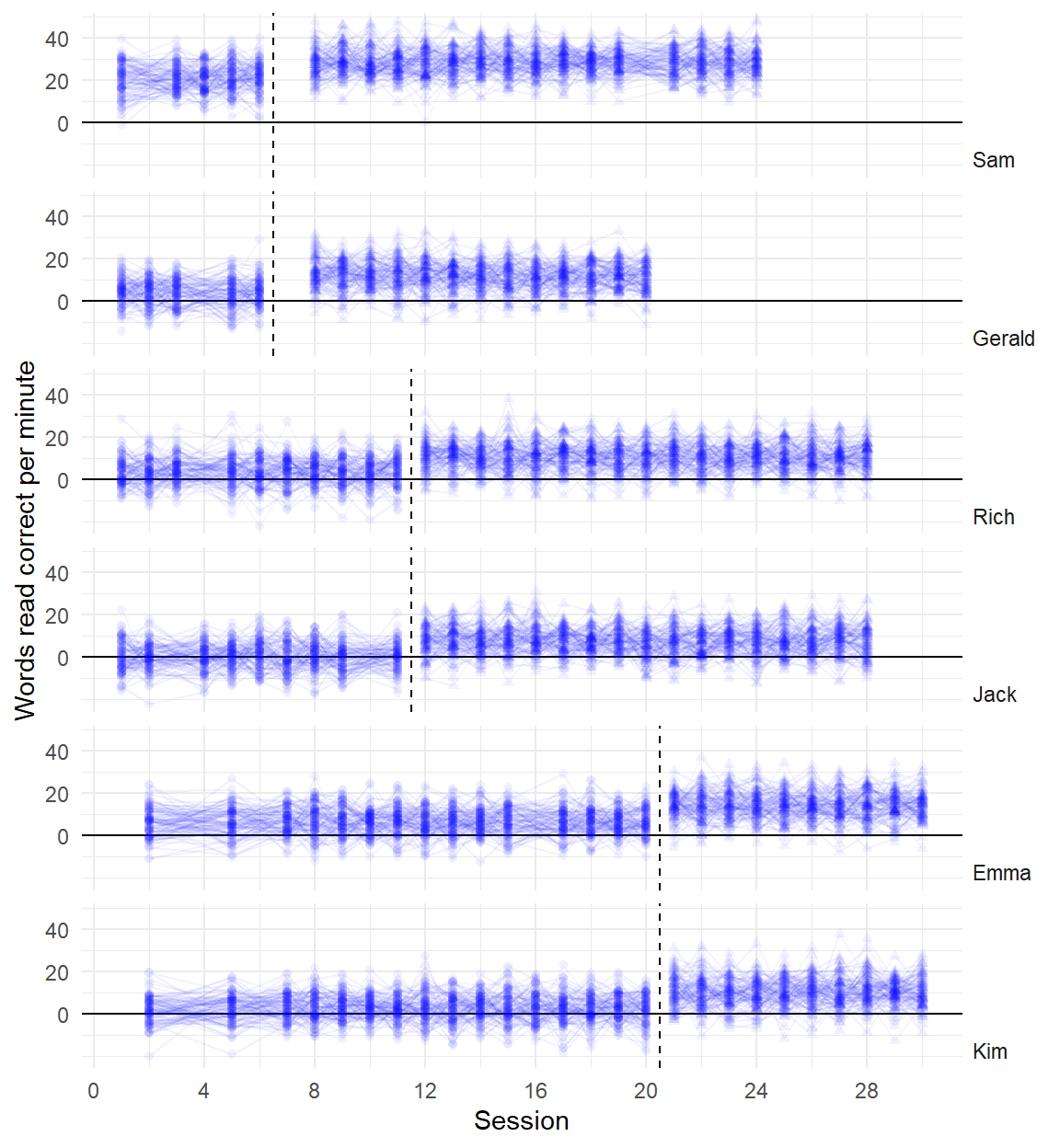

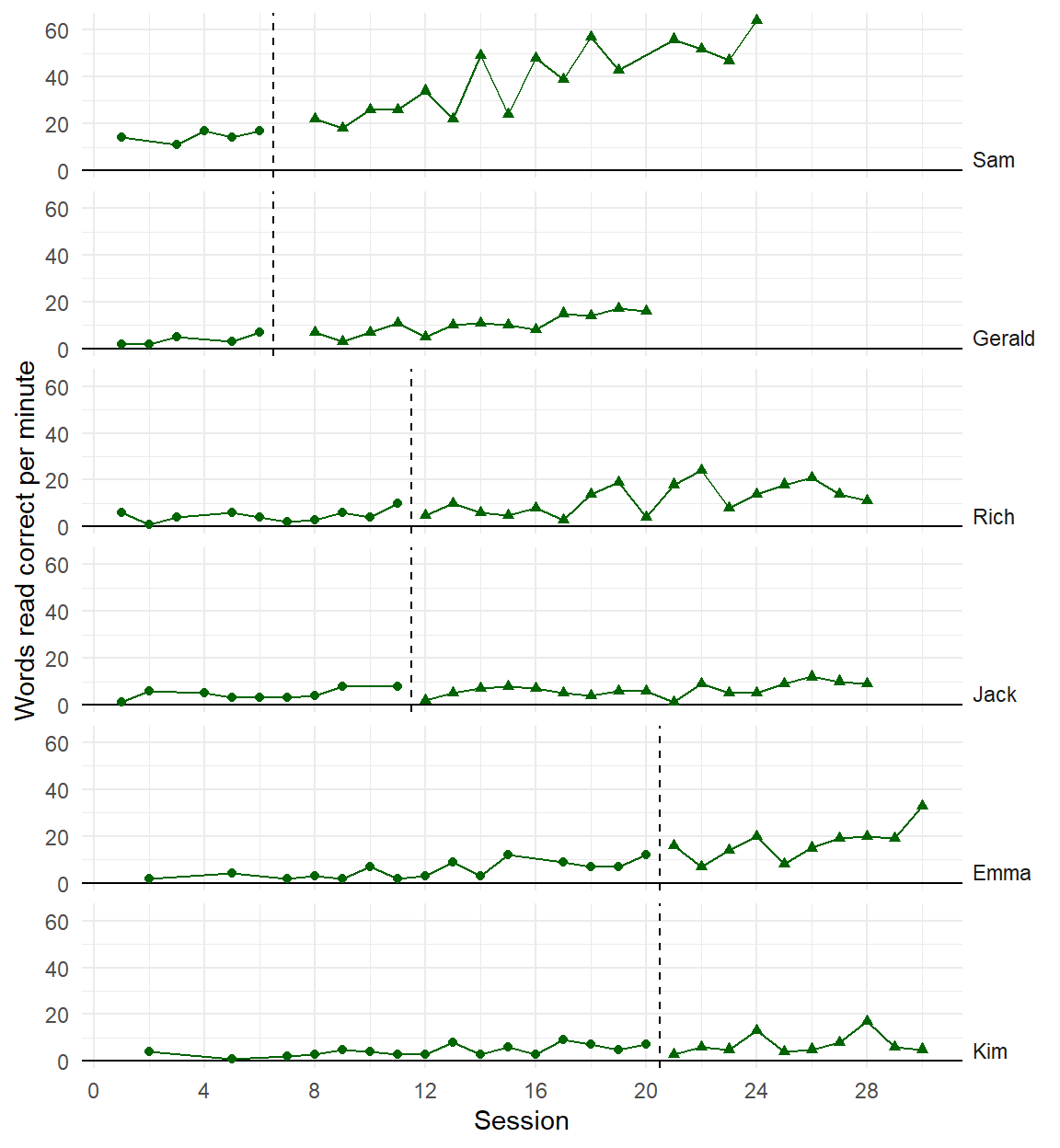

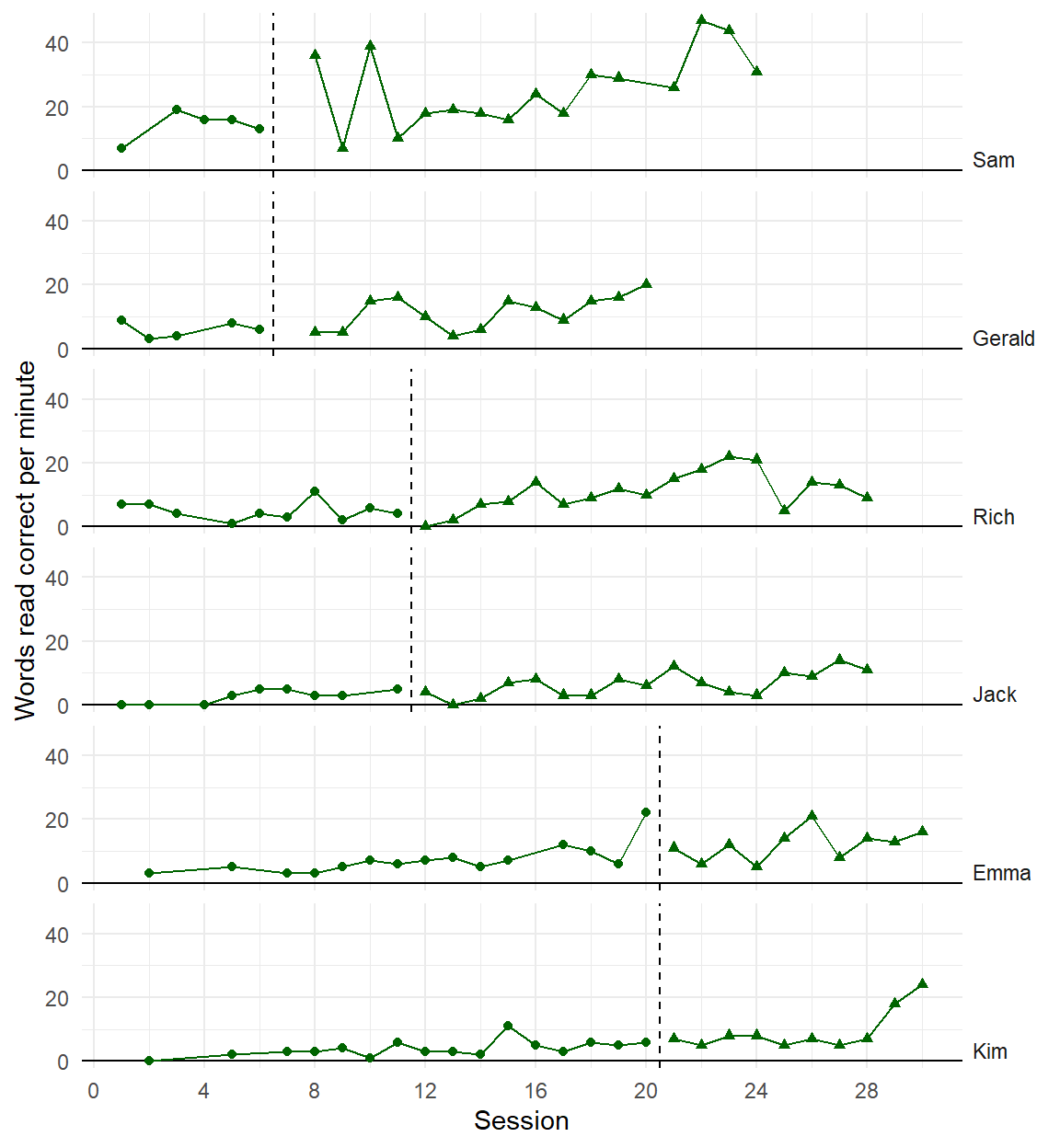

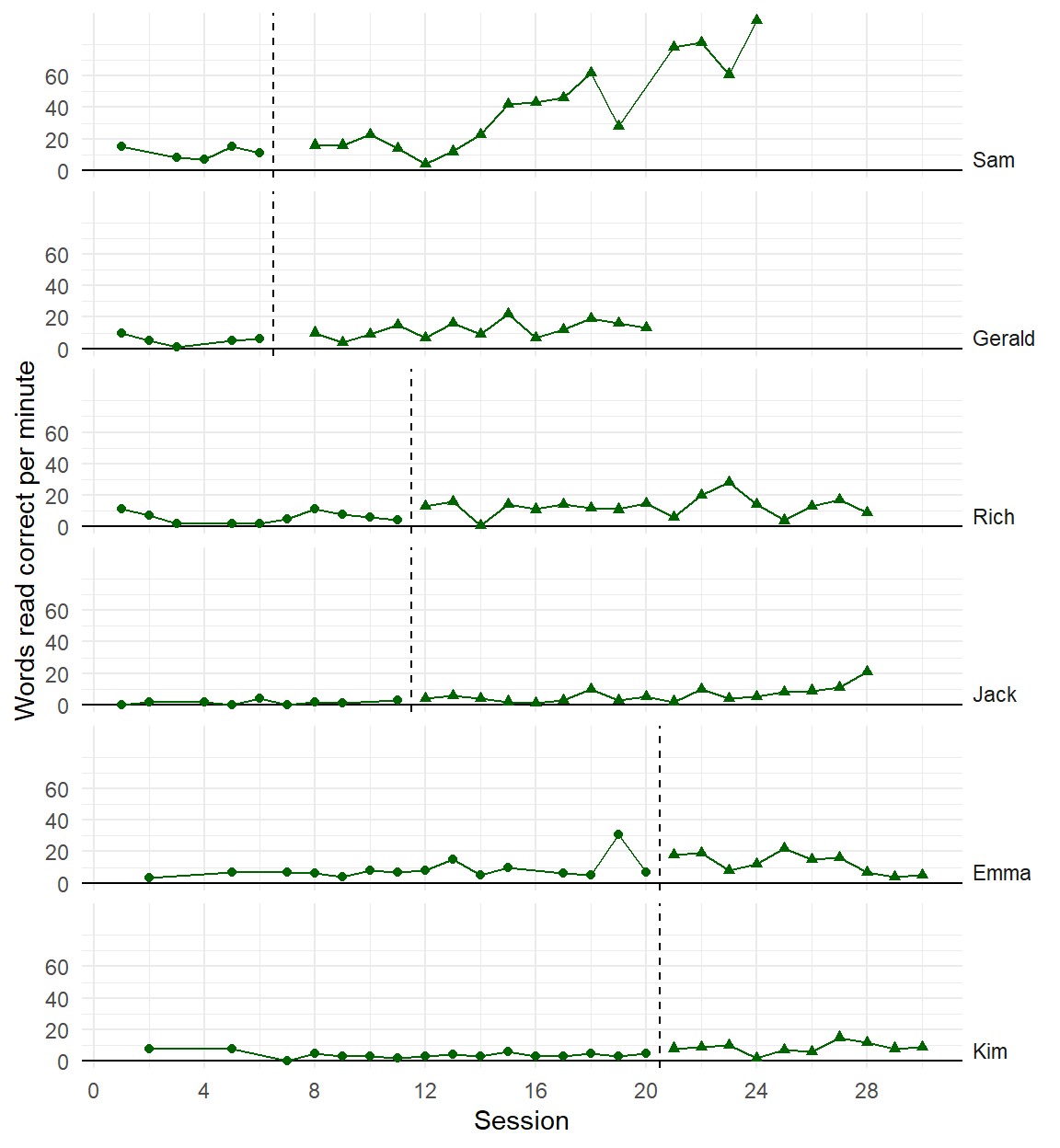

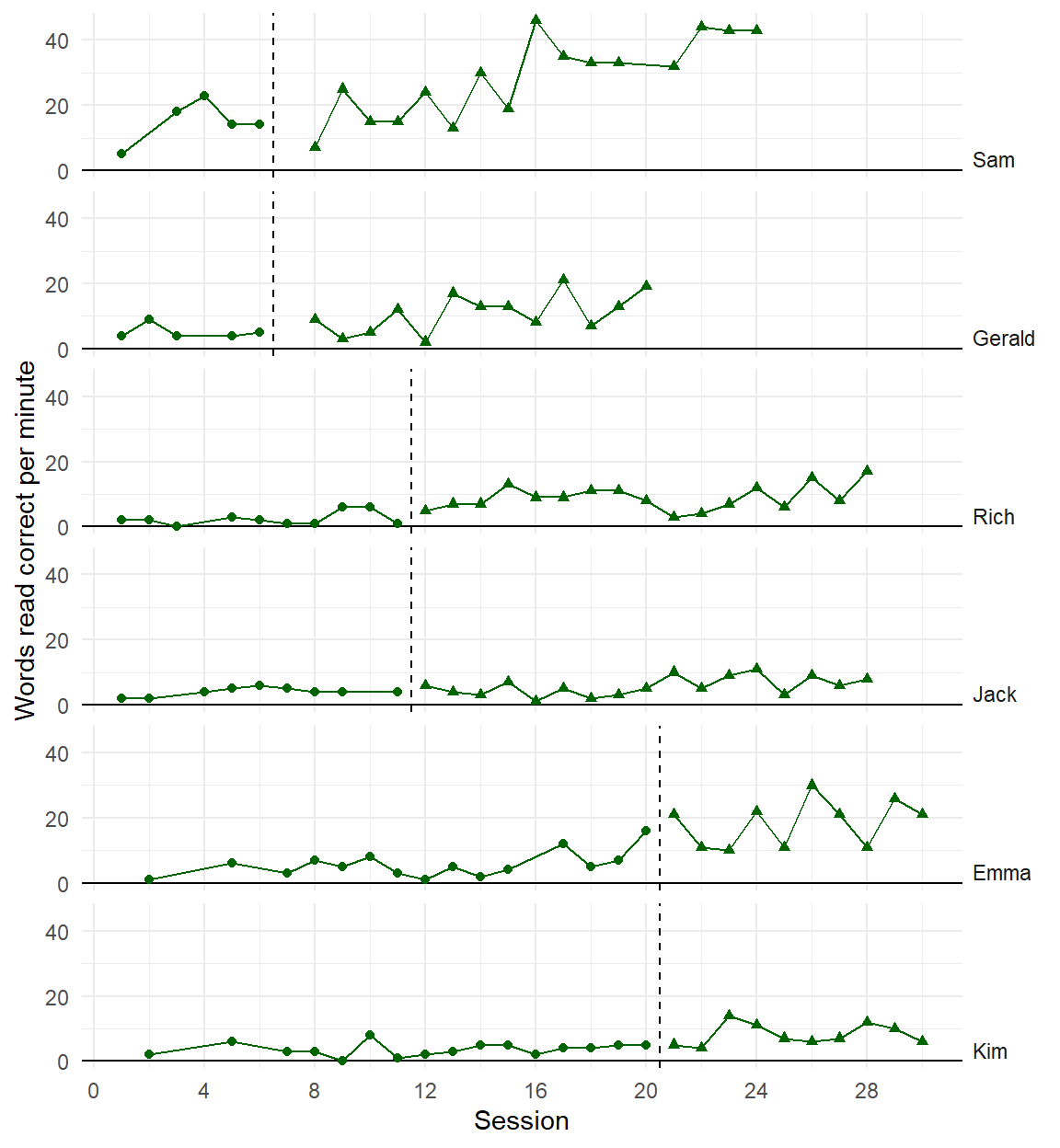

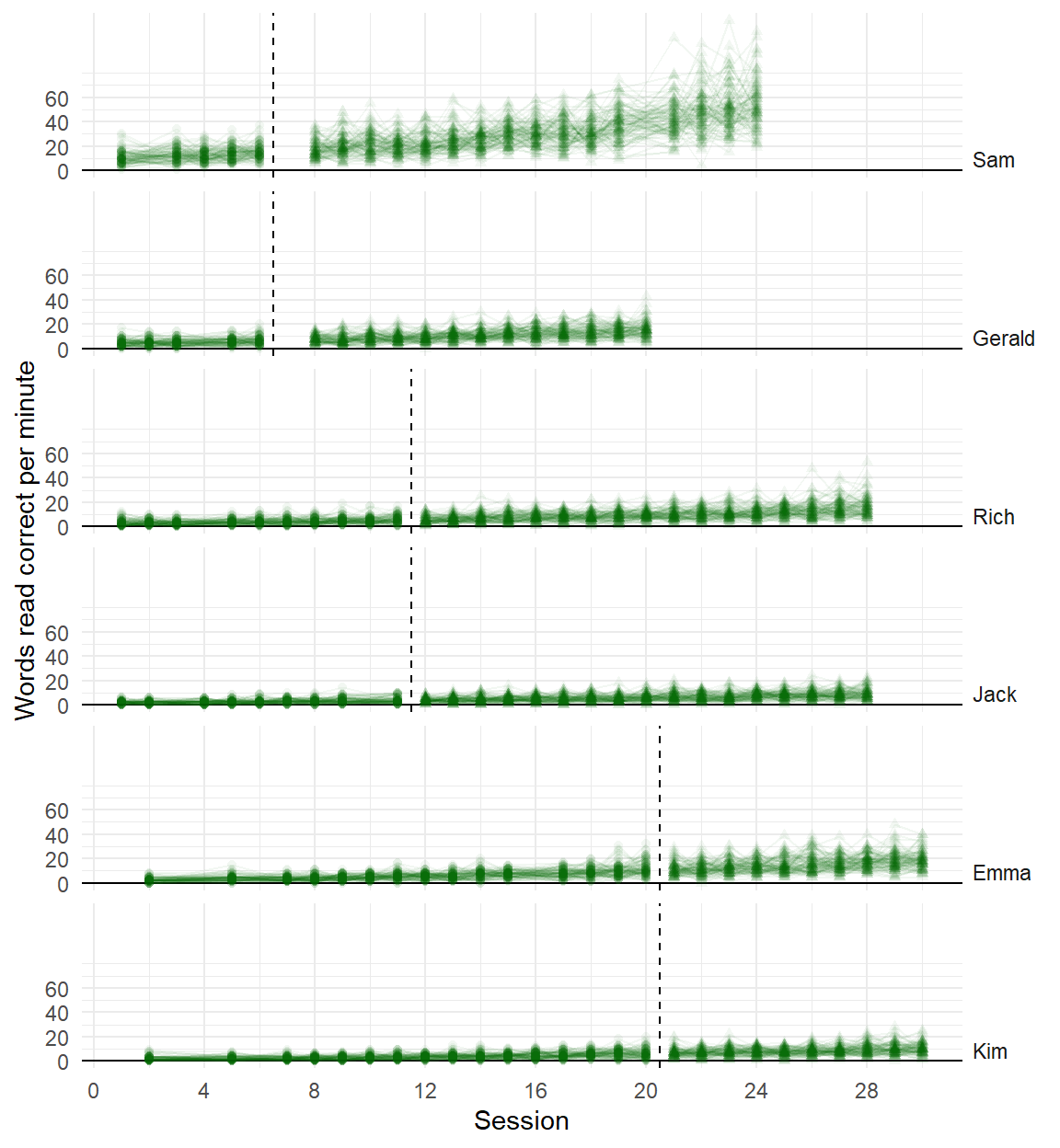

Use the fitted model to simulate artificial data.

Examine the simulated data…

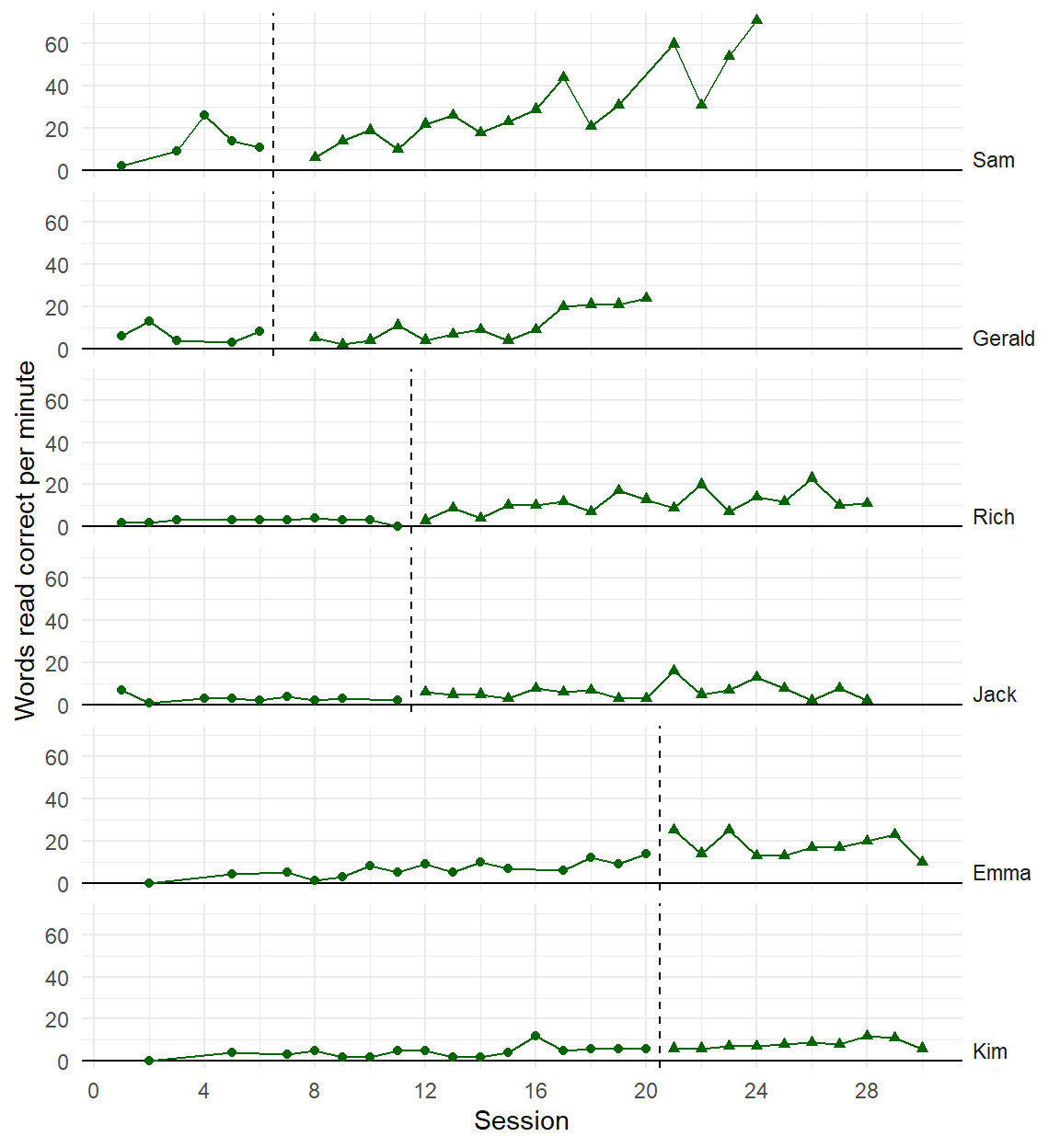

By graphing it just like you would with real data.

By calculating summary statistics for important features.
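The workflow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the model used in the talk: the "posterior draws" are made-up numbers standing in for MCMC output, the toy model is a normal outcome with a level shift between phases, and the names `simulate_replicate`, `predictive_check`, and `phase_mean_difference` are hypothetical.

```python
import random
import statistics

def simulate_replicate(draw, phases, rng):
    """Simulate one artificial data series from a single posterior draw.

    Toy model (an assumption for illustration):
    y_t ~ Normal(baseline + shift * treatment_t, sd).
    """
    return [
        rng.gauss(draw["baseline"] + draw["shift"] * is_treatment, draw["sd"])
        for is_treatment in phases
    ]

def phase_mean_difference(y, phases):
    """Summary statistic: treatment-phase mean minus baseline-phase mean."""
    base = [v for v, p in zip(y, phases) if p == 0]
    trt = [v for v, p in zip(y, phases) if p == 1]
    return statistics.mean(trt) - statistics.mean(base)

def predictive_check(draws, phases, statistic, seed=1):
    """Simulate one replicate per draw and compute the statistic on each."""
    rng = random.Random(seed)
    return [statistic(simulate_replicate(d, phases, rng), phases) for d in draws]

# Hypothetical design: 5 baseline sessions followed by 8 treatment sessions.
phases = [0] * 5 + [1] * 8

# Stand-in for step 1 (fitting the model): made-up posterior draws.
draw_rng = random.Random(42)
draws = [
    {
        "baseline": draw_rng.gauss(30, 2),
        "shift": draw_rng.gauss(15, 3),
        "sd": abs(draw_rng.gauss(6, 1)),
    }
    for _ in range(500)
]

# Steps 2 and 3: simulate artificial data, then summarize each replicate.
replicated = predictive_check(draws, phases, phase_mean_difference)
print(len(replicated), round(statistics.median(replicated), 1))
```

In practice the draws would come from the fitted model, and the replicated series would also be graphed in the same way as the real data.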

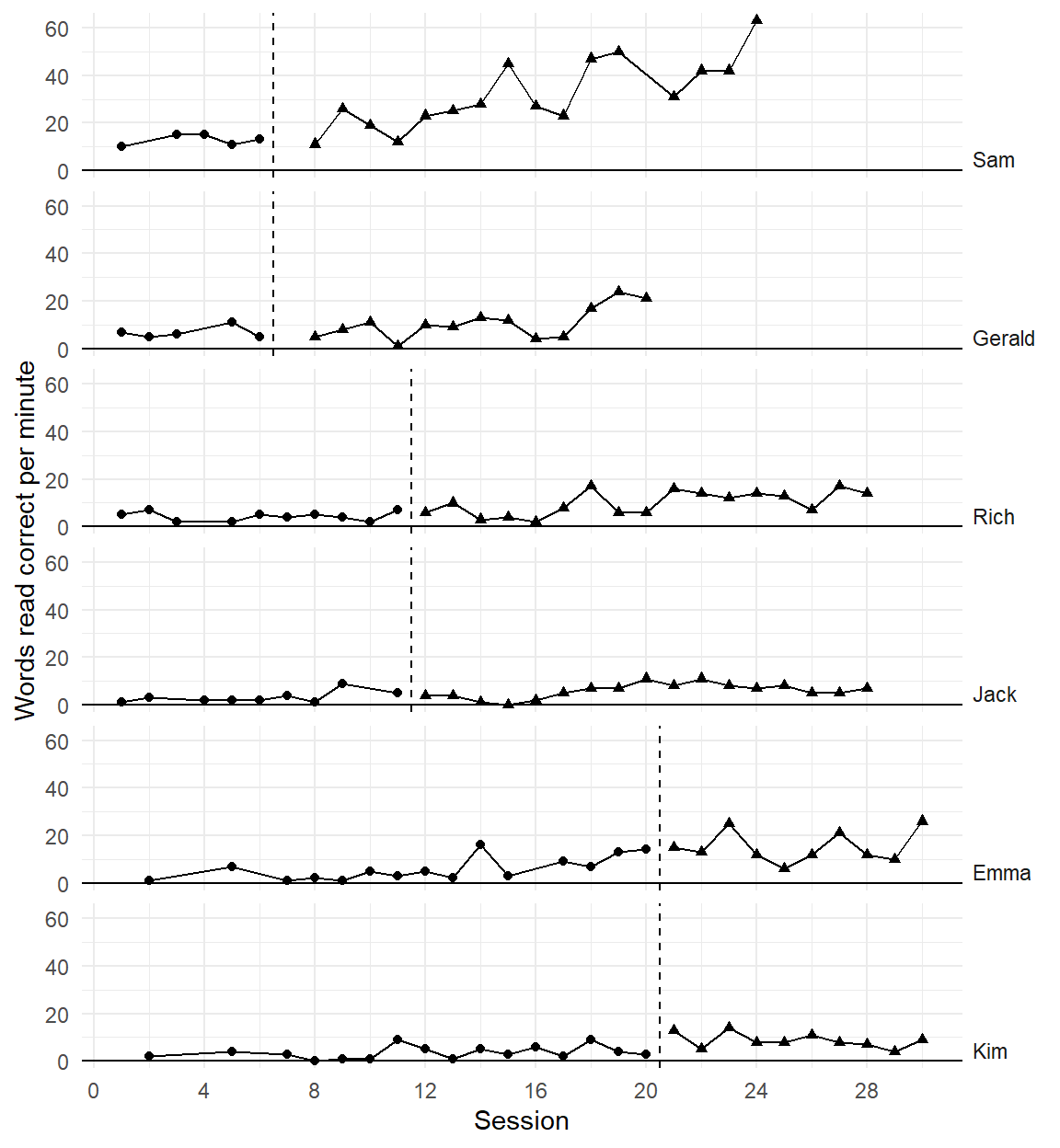

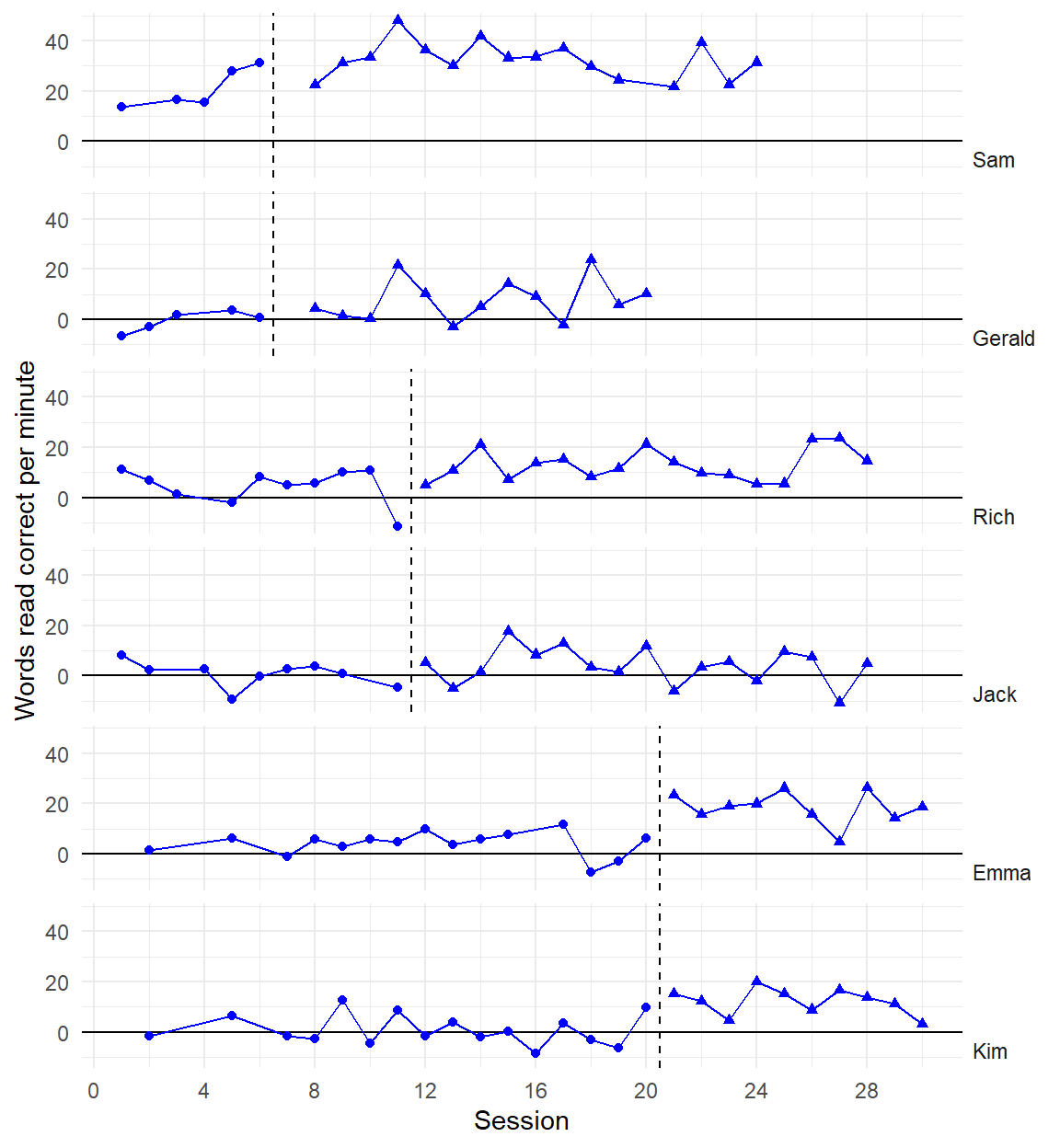

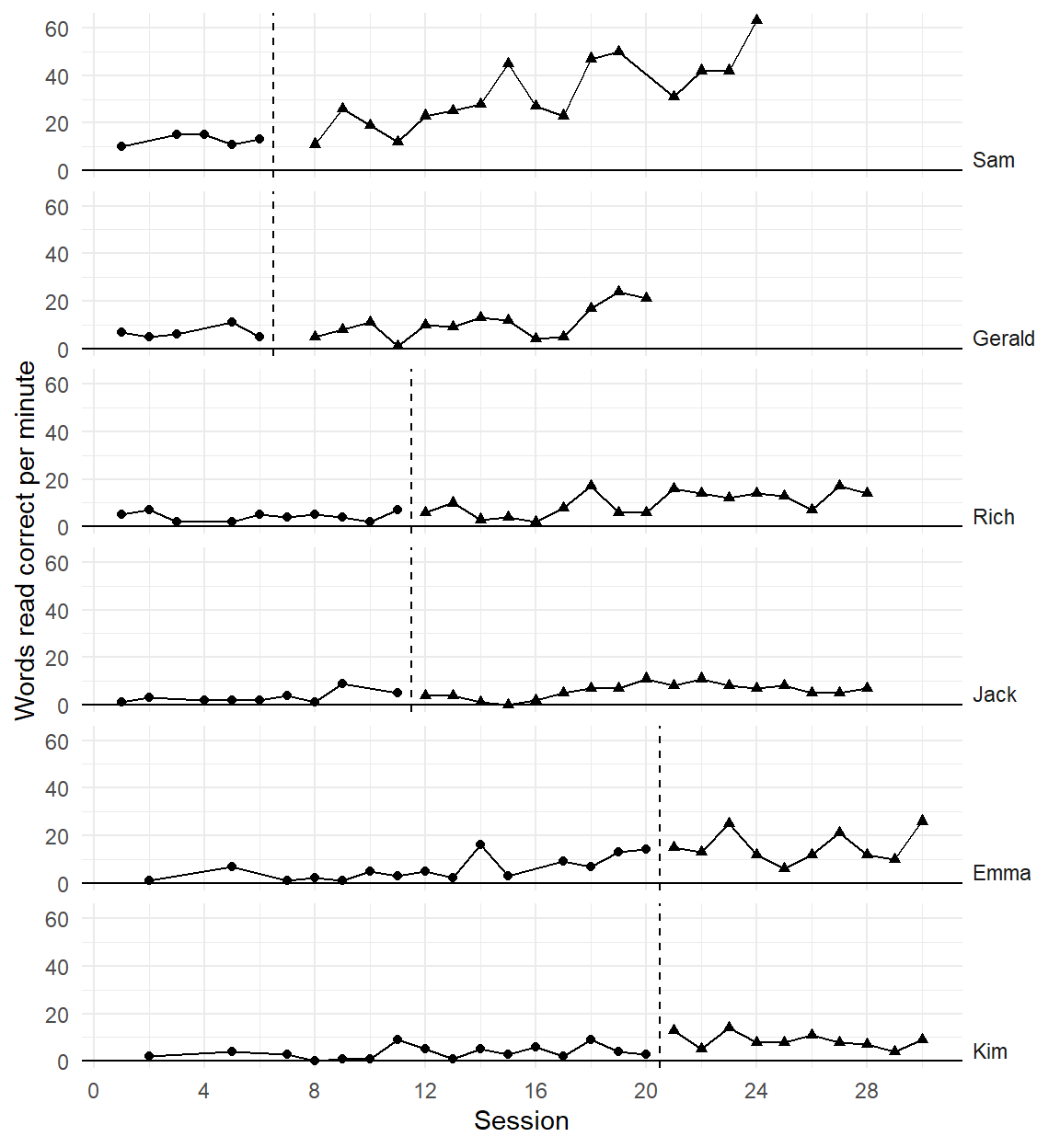

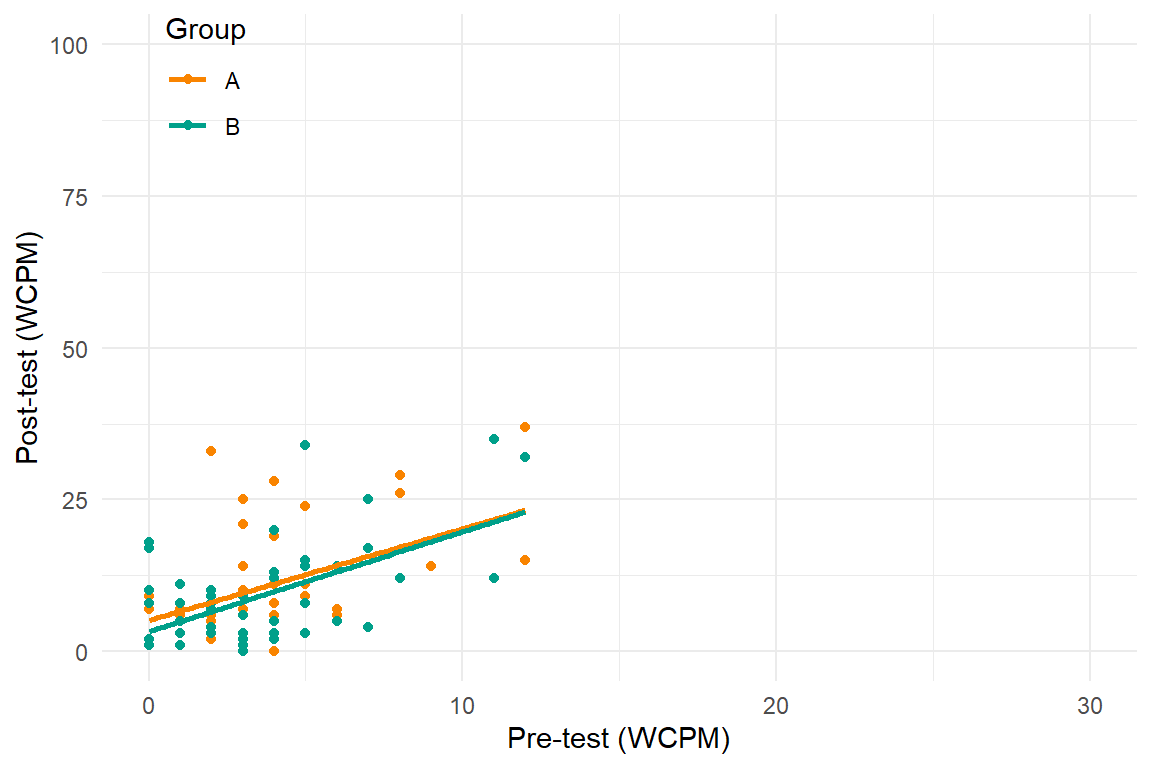

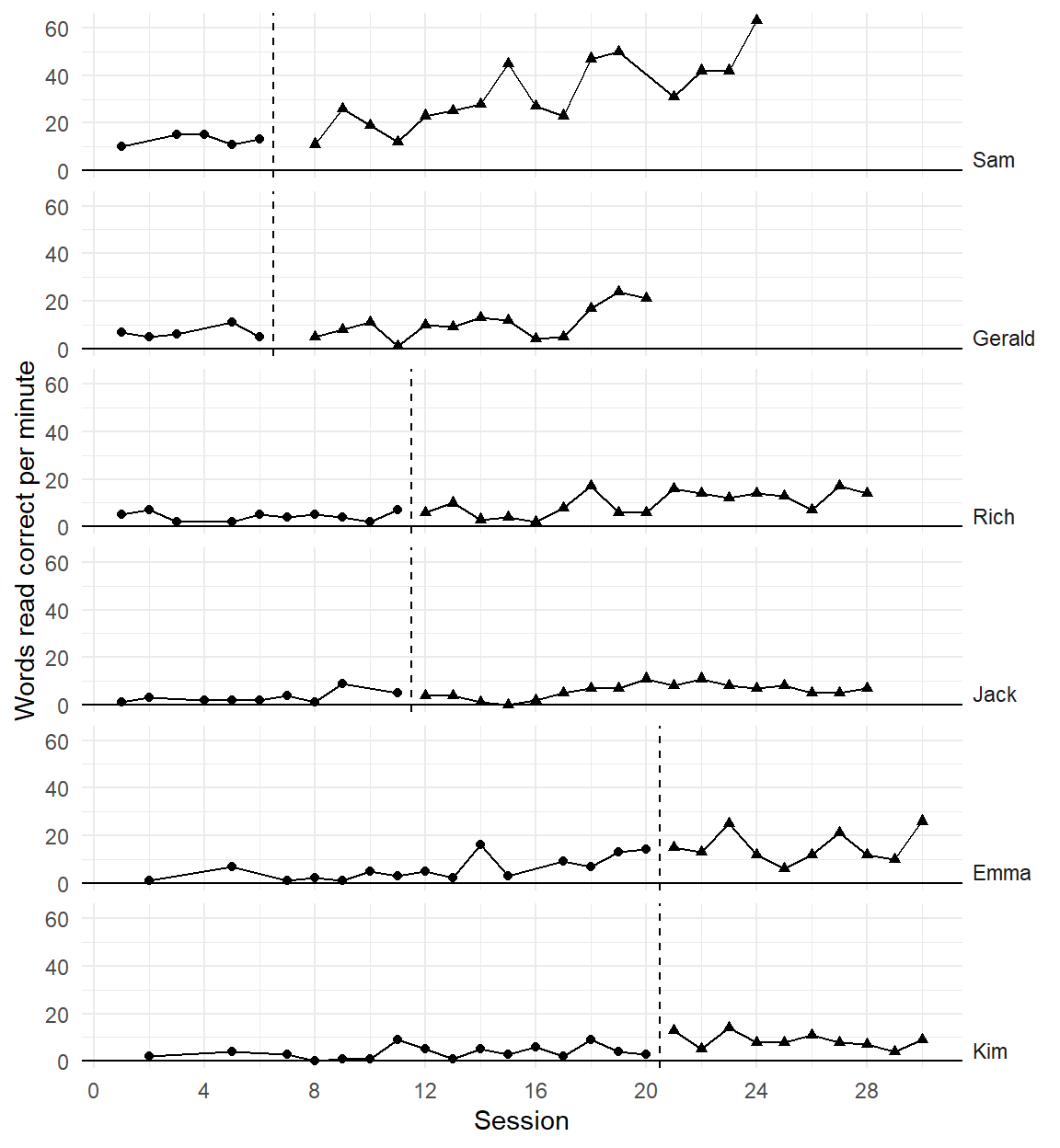

Barton-Arwood, Wehby, and Falk (2005)

Multiple baseline across participant pairs

Third graders with emotional and/or behavioral disabilities.

Horizon Fast-Track reading program and Peer-Assisted Learning Strategies

One-minute oral reading fluency (words read correct)

Three points of comparison

Are the simulated data points plausible considering what you know about the participants, behavior, and study context?

Are the simulated data points similar to the real data?

Are the simulated data points more realistic than data simulated from alternative models?

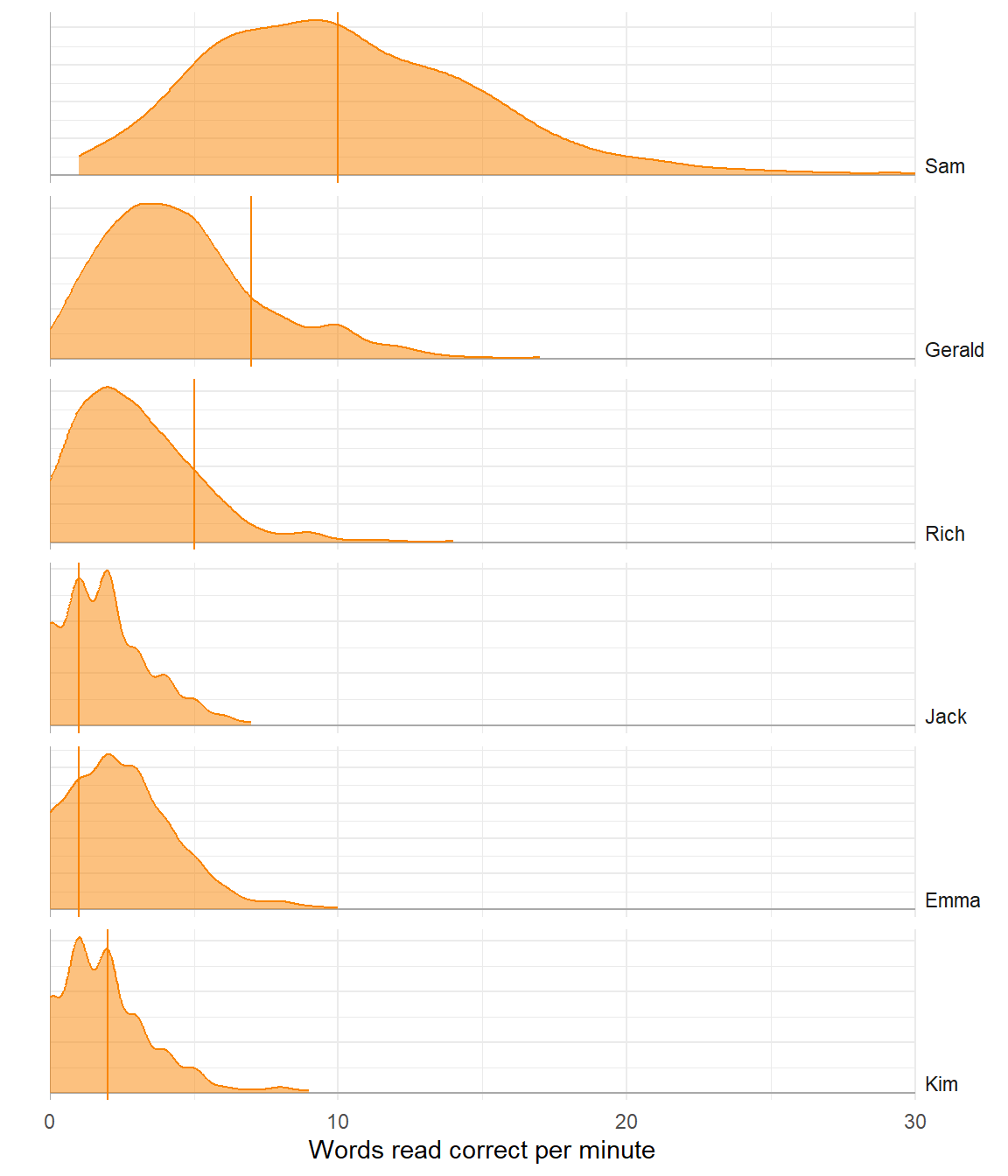

Summary Statistics

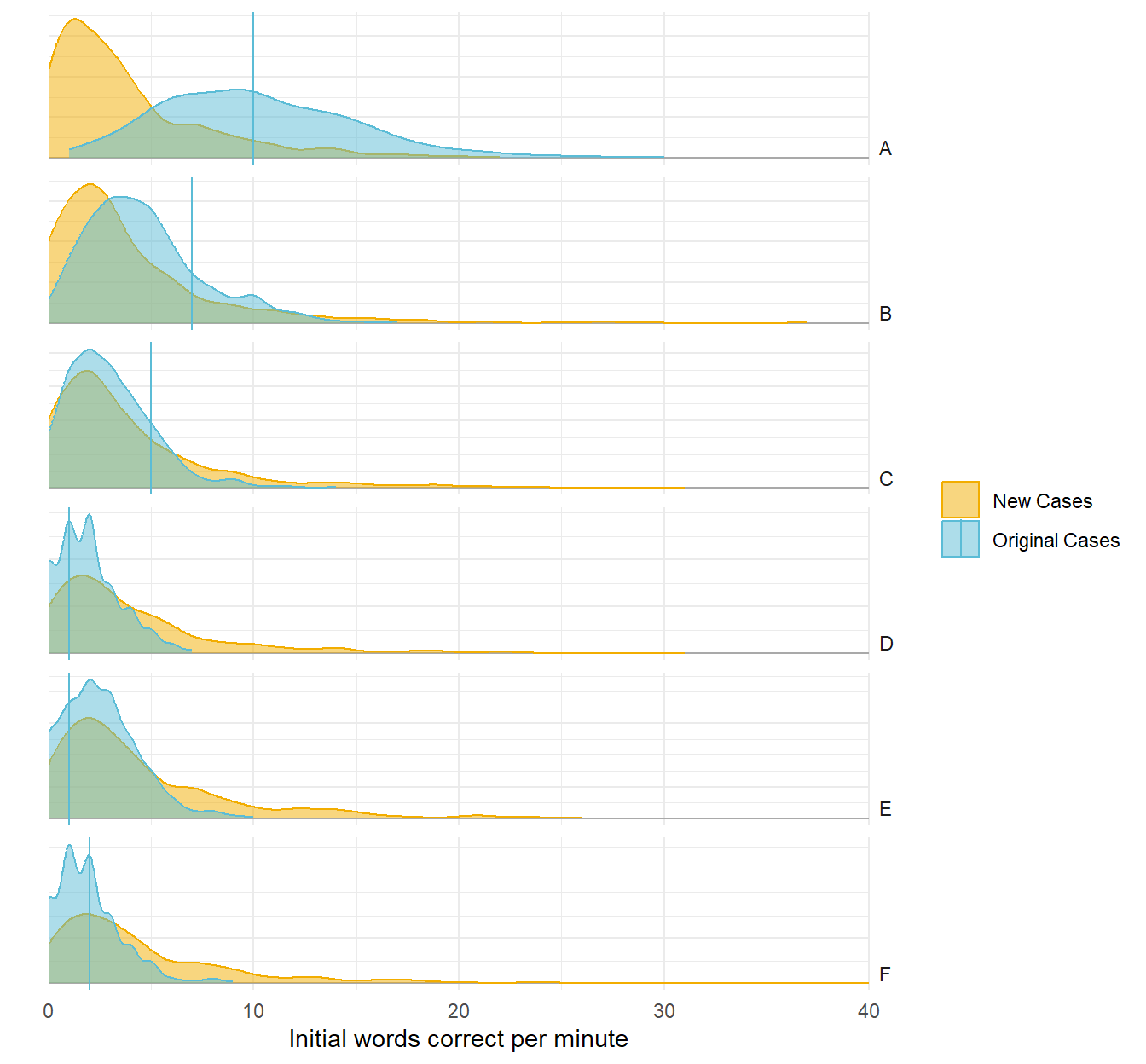

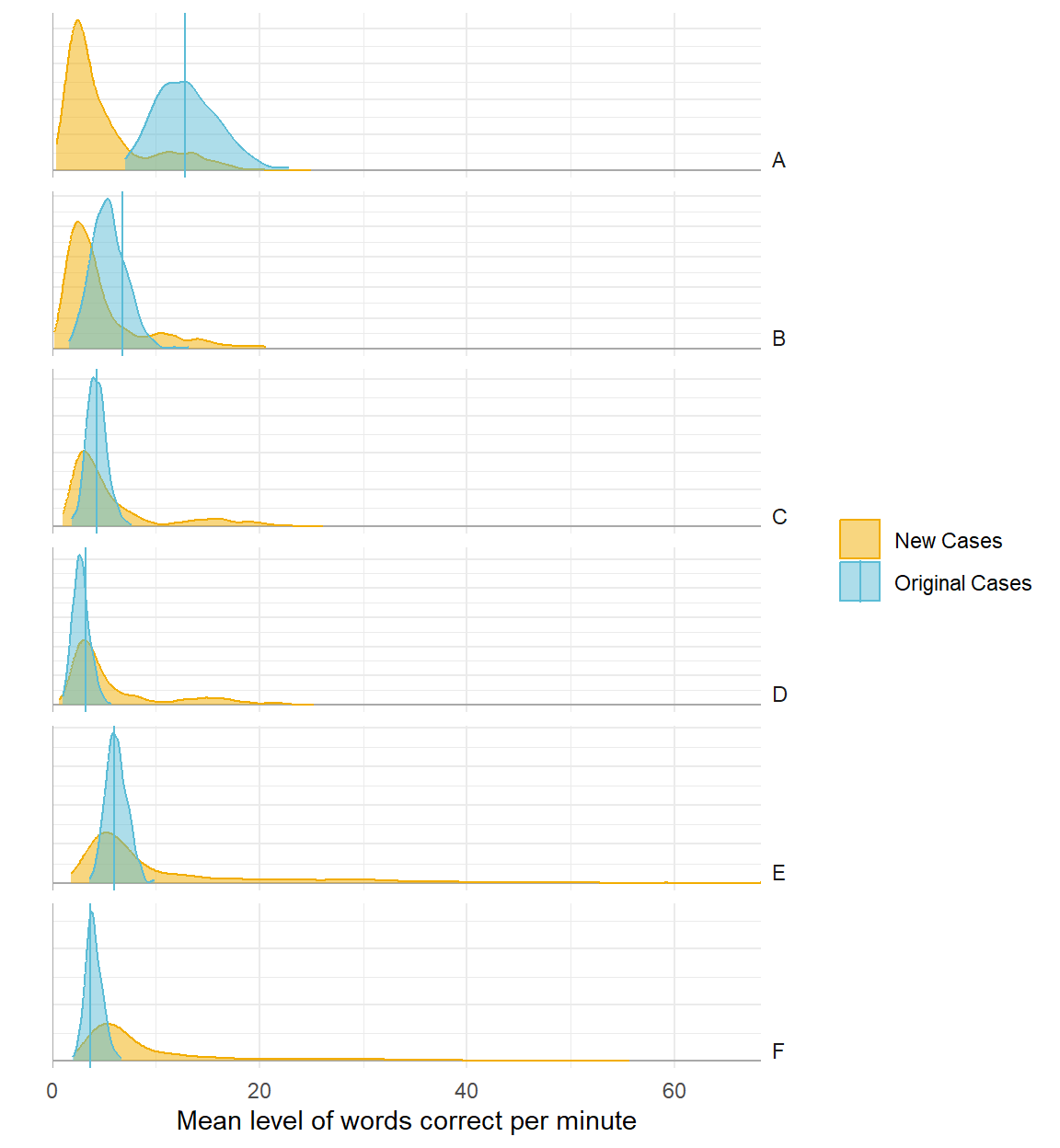

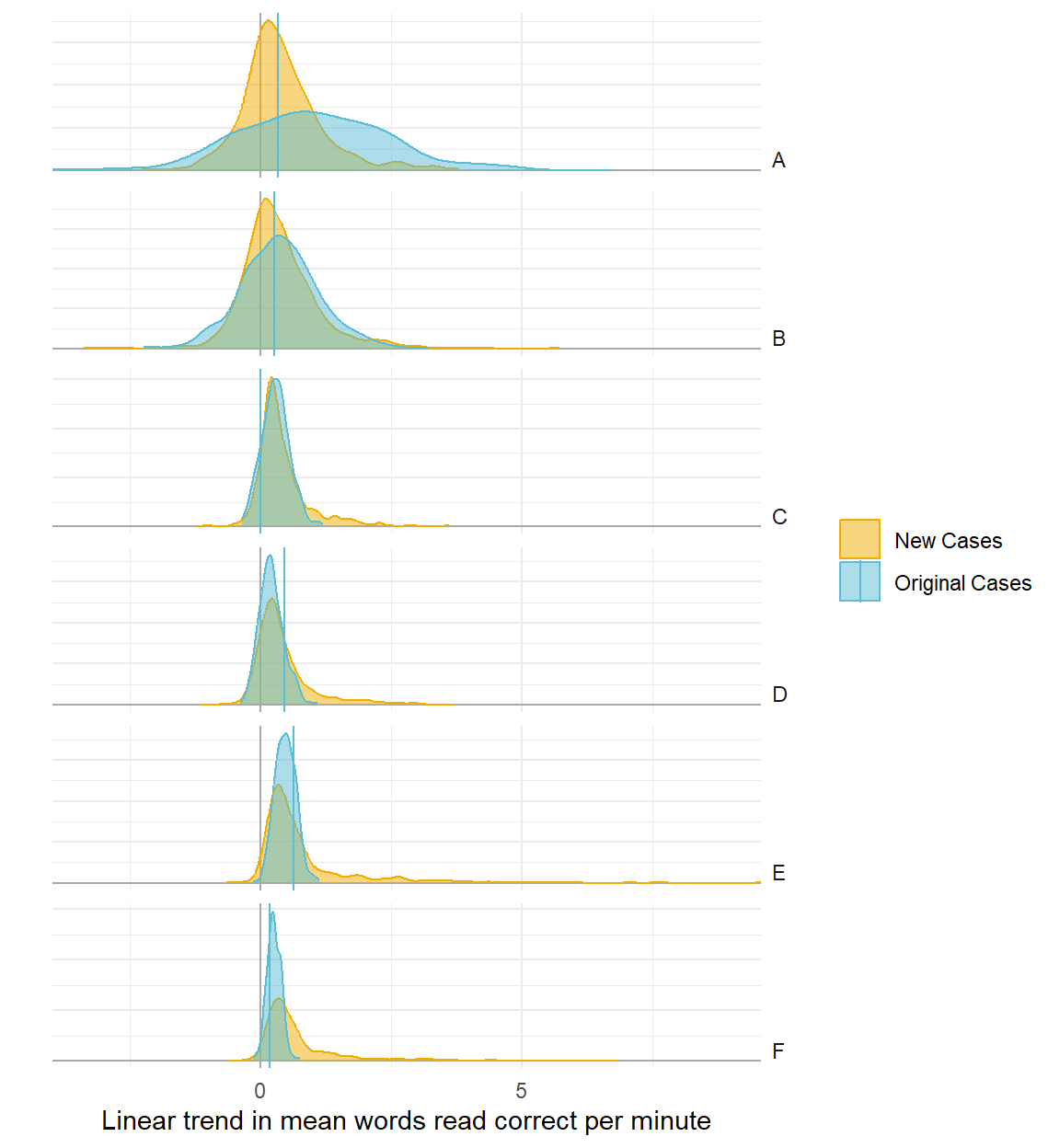

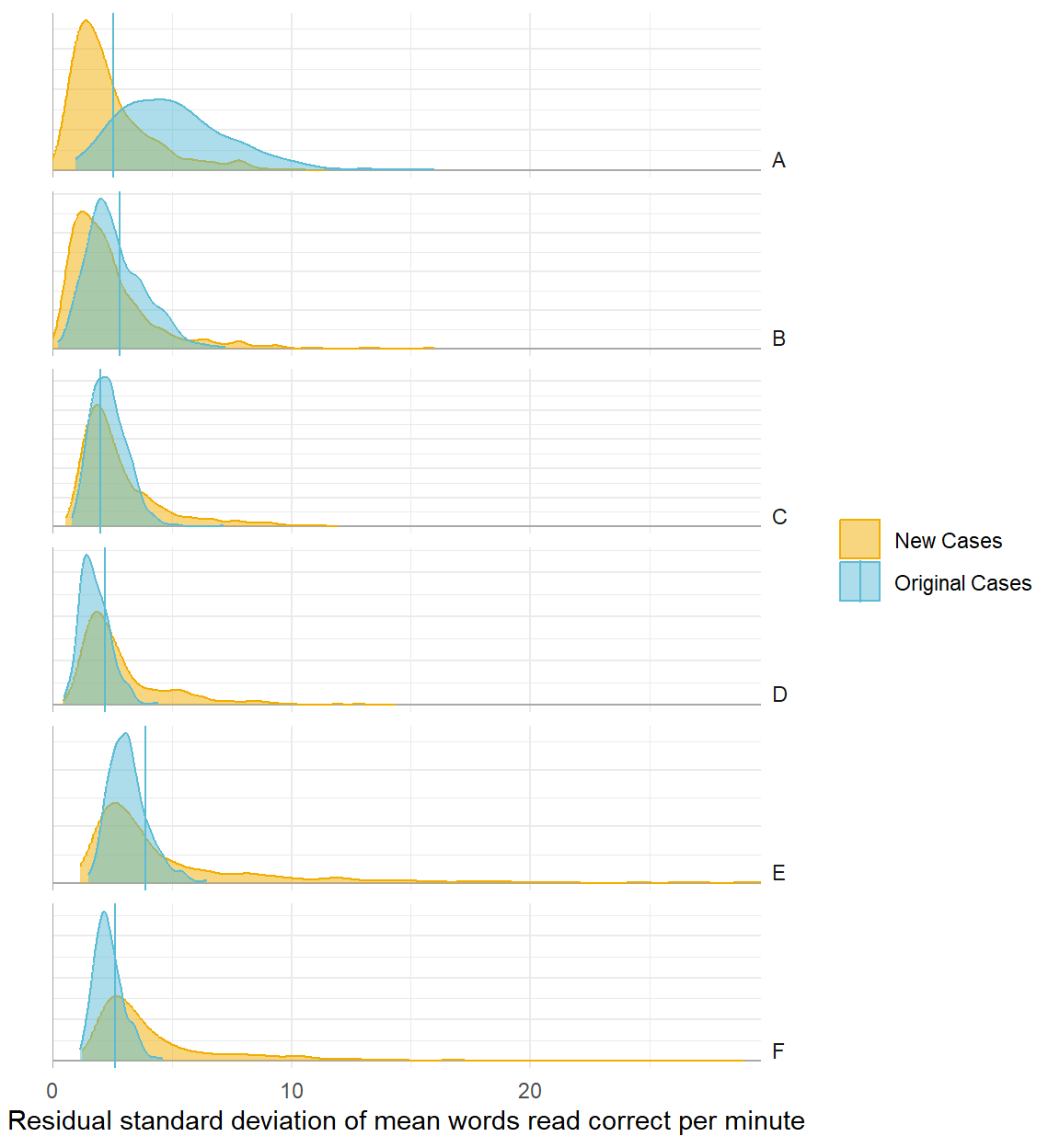

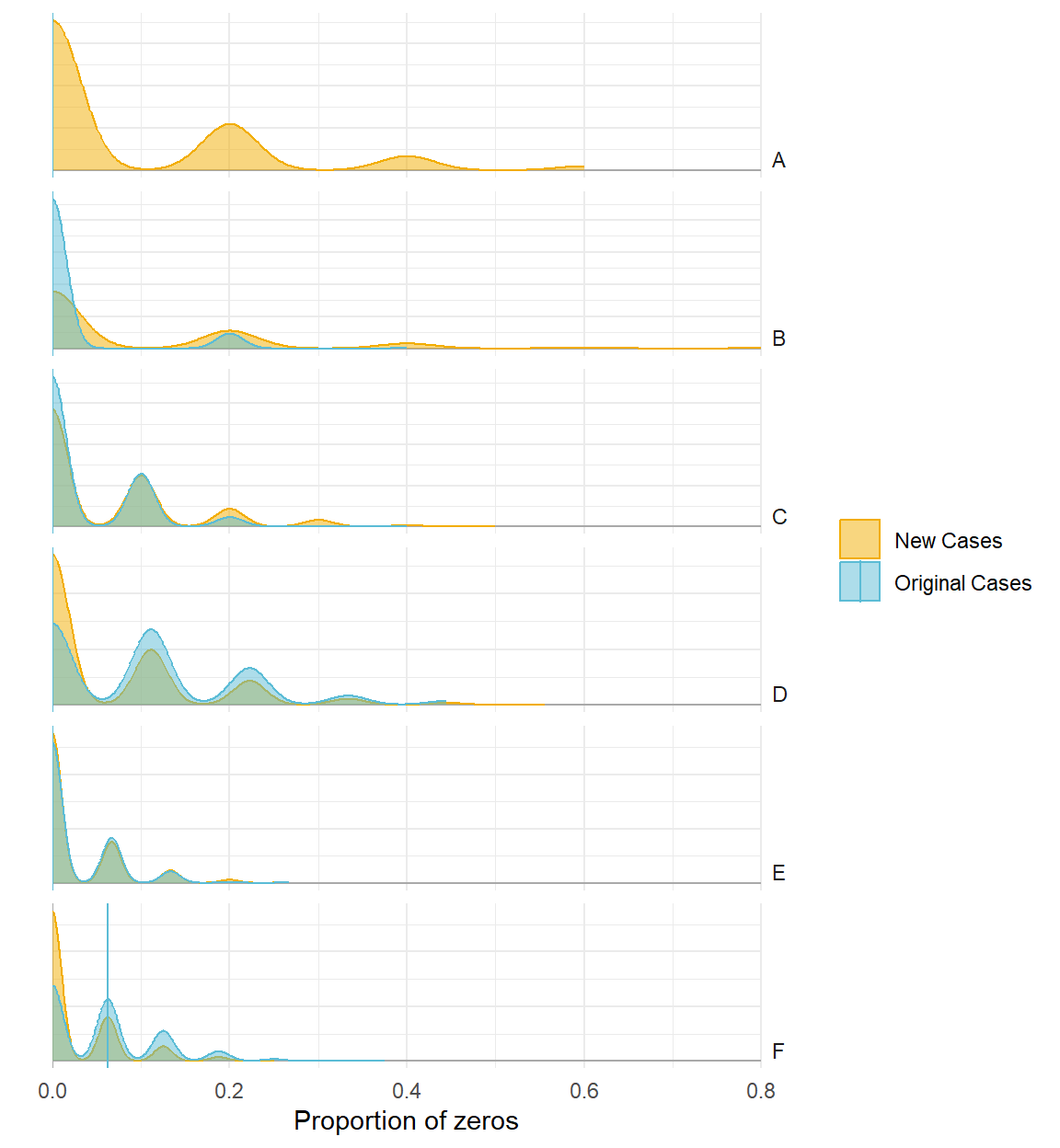

- Examining summary statistics allows us to focus on and isolate specific important visual features of each data series.
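One common way to compare an observed summary statistic with its simulated counterparts is to report where the observed value falls in the distribution of replicated values. The sketch below assumes a list of replicated statistics is already available; the function names and the numbers are hypothetical, and the talk itself relies on graphical comparison rather than this numerical summary.

```python
import statistics

def tail_proportion(observed, replicated):
    """Share of replicated statistics at or above the observed value."""
    return sum(r >= observed for r in replicated) / len(replicated)

def central_90_interval(replicated):
    """Approximate 5th and 95th percentiles of the replicated statistics."""
    cuts = statistics.quantiles(replicated, n=20)  # cut points at 5%, 10%, ..., 95%
    return cuts[0], cuts[-1]

# Made-up values for illustration only.
replicated_stats = list(range(1, 101))
observed_stat = 90

p_upper = tail_proportion(observed_stat, replicated_stats)
lo, hi = central_90_interval(replicated_stats)
print(p_upper, lo, hi)
```

A tail proportion near 0 or 1 suggests the model rarely reproduces that feature of the real data.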

But which summary statistics?

Initial performance: First baseline observation

Level: Mean/median of each phase

Trend: Slope from linear regression (Manolov, Lebrault, and Krasny-Pacini 2024)

Variability: SD of each phase

Extinction: Proportion of zeros (Scotti et al. 1991)

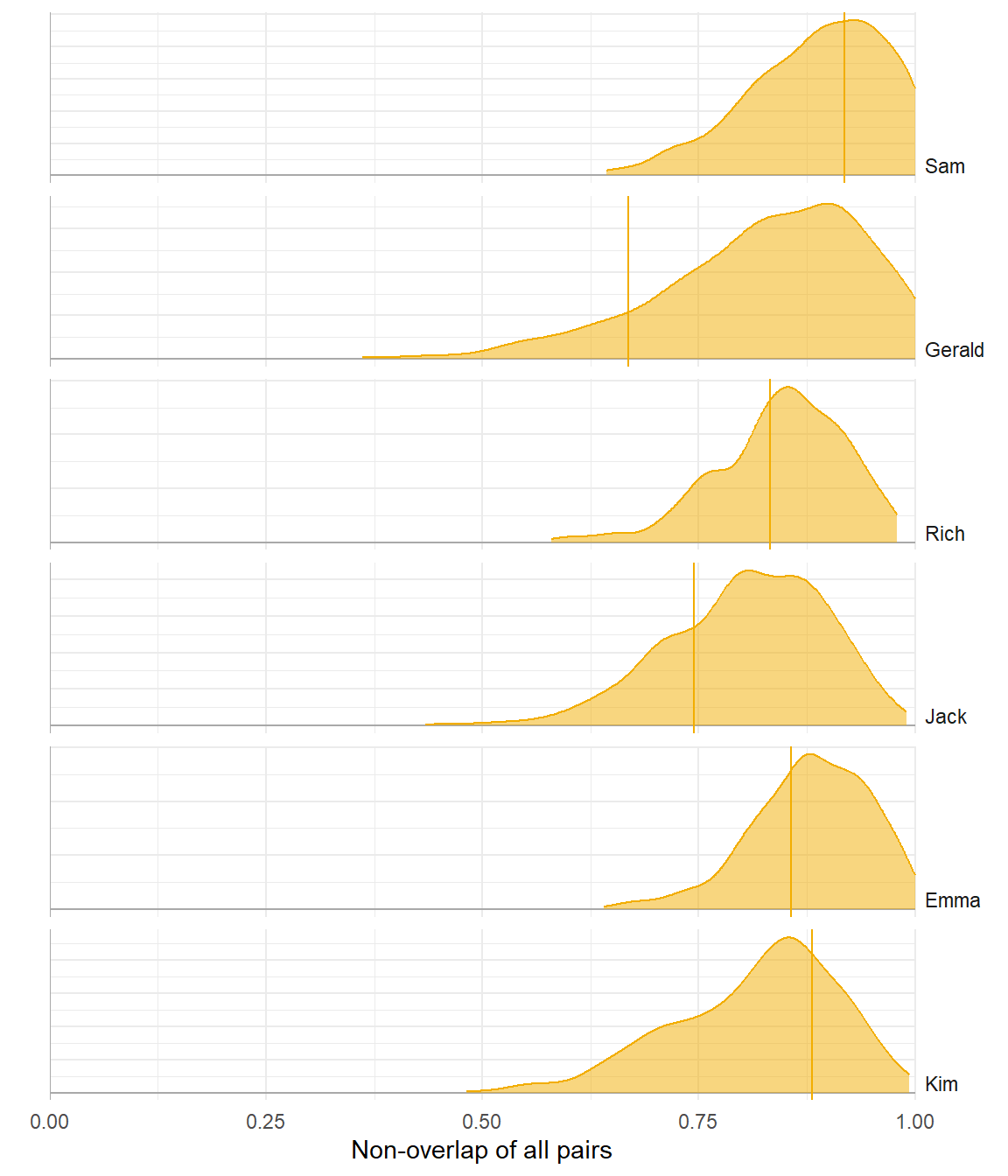

Overlap: Non-overlap of all pairs (Parker and Vannest 2009)

Immediacy: Fine-grained effects (Ferron, Kirby, and Lipien 2024)

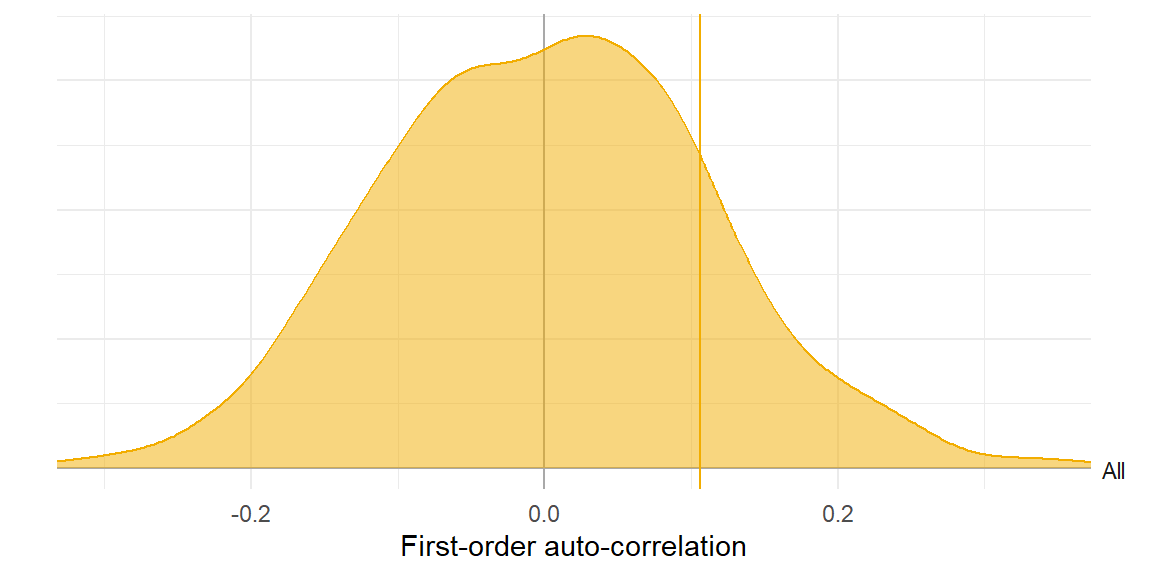

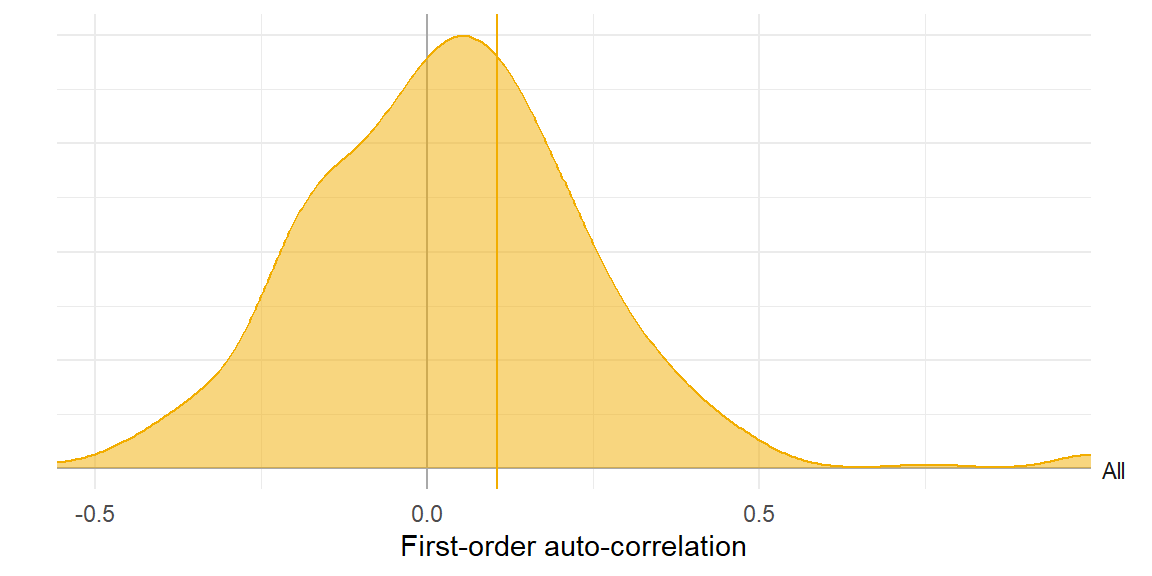

Auto-correlation: First-order auto-correlation estimate (Busk and Marascuilo 1988; Matyas and Greenwood 1996)
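Most of the summary statistics listed above are simple to compute for a single data series. The sketch below uses made-up data and hypothetical function names. It assumes that higher scores indicate improvement when computing non-overlap of all pairs, and it uses one common lag-1 autocorrelation estimator. Immediacy (fine-grained effects) is left out because the slides do not give its formula.

```python
import statistics

def ols_slope(y):
    """Least-squares slope of y regressed on session index 0, 1, 2, ..."""
    x = list(range(len(y)))
    x_bar, y_bar = statistics.mean(x), statistics.mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx

def lag1_autocorrelation(y):
    """Lag-1 autocorrelation: lagged cross-products over total sum of squares."""
    y_bar = statistics.mean(y)
    num = sum((a - y_bar) * (b - y_bar) for a, b in zip(y, y[1:]))
    den = sum((v - y_bar) ** 2 for v in y)
    return num / den

def nap(baseline, treatment):
    """Non-overlap of all pairs; ties count as half (assumes higher is better)."""
    wins = sum((t > b) + 0.5 * (t == b) for b in baseline for t in treatment)
    return wins / (len(baseline) * len(treatment))

def summarize(baseline, treatment):
    """Collect summary statistics for one baseline/treatment series."""
    return {
        "initial": baseline[0],
        "level_baseline": statistics.mean(baseline),
        "level_treatment": statistics.mean(treatment),
        "trend_baseline": ols_slope(baseline),
        "sd_baseline": statistics.stdev(baseline),
        "prop_zero_baseline": sum(v == 0 for v in baseline) / len(baseline),
        "nap": nap(baseline, treatment),
        "autocorr_baseline": lag1_autocorrelation(baseline),
    }

# Made-up series for illustration only.
baseline = [2, 0, 3, 1]
treatment = [5, 4, 6, 7, 5]
summary = summarize(baseline, treatment)
for name, value in summary.items():
    print(name, round(value, 3))
```

Applying the same `summarize` function to the real series and to each simulated series gives the values that a predictive check compares.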

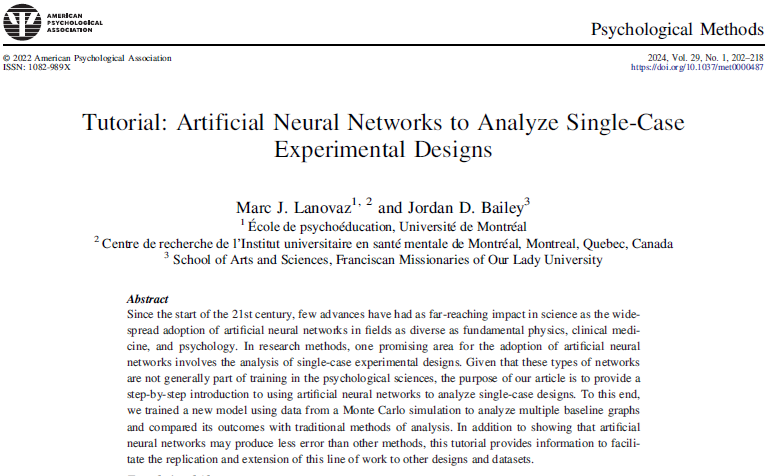

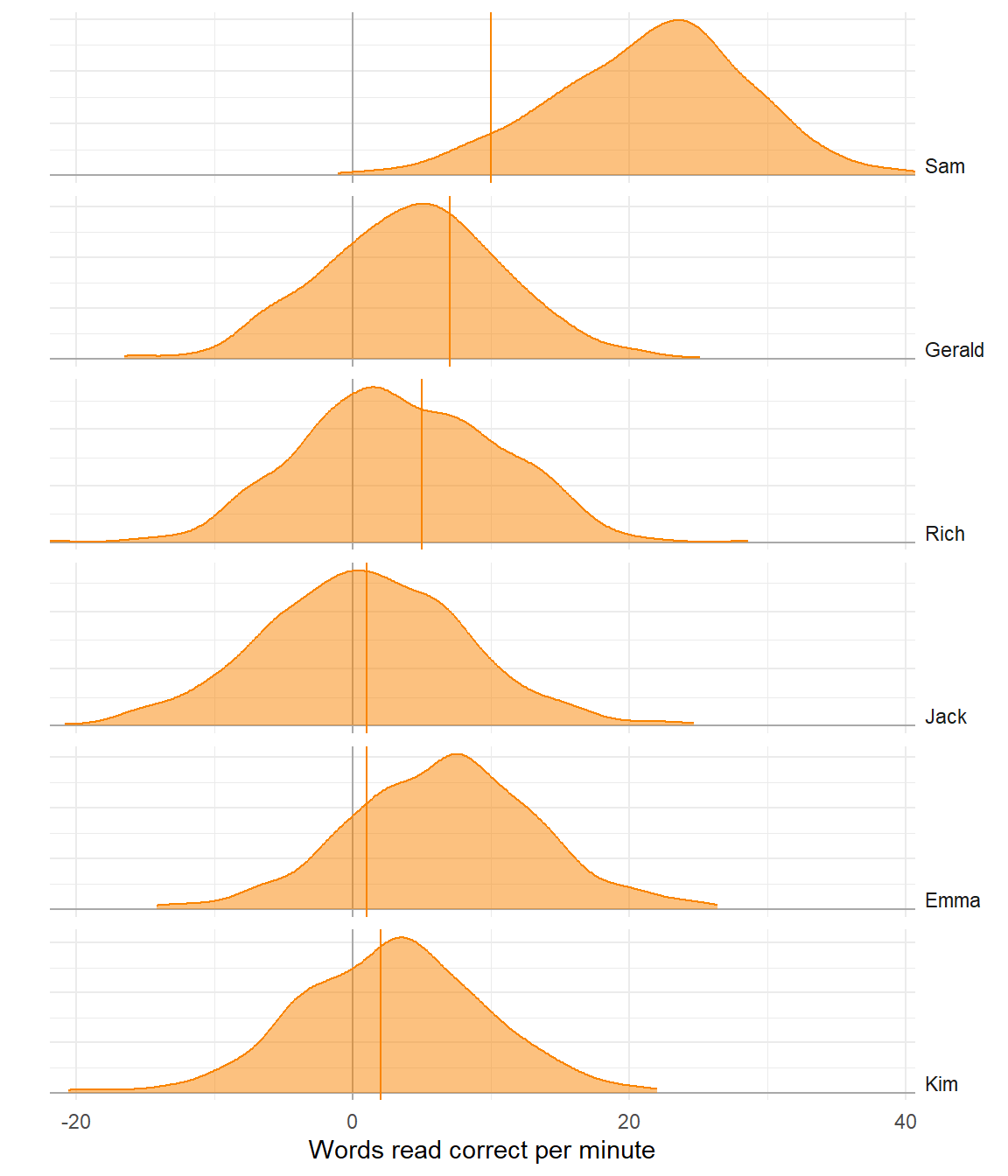

Initial observation

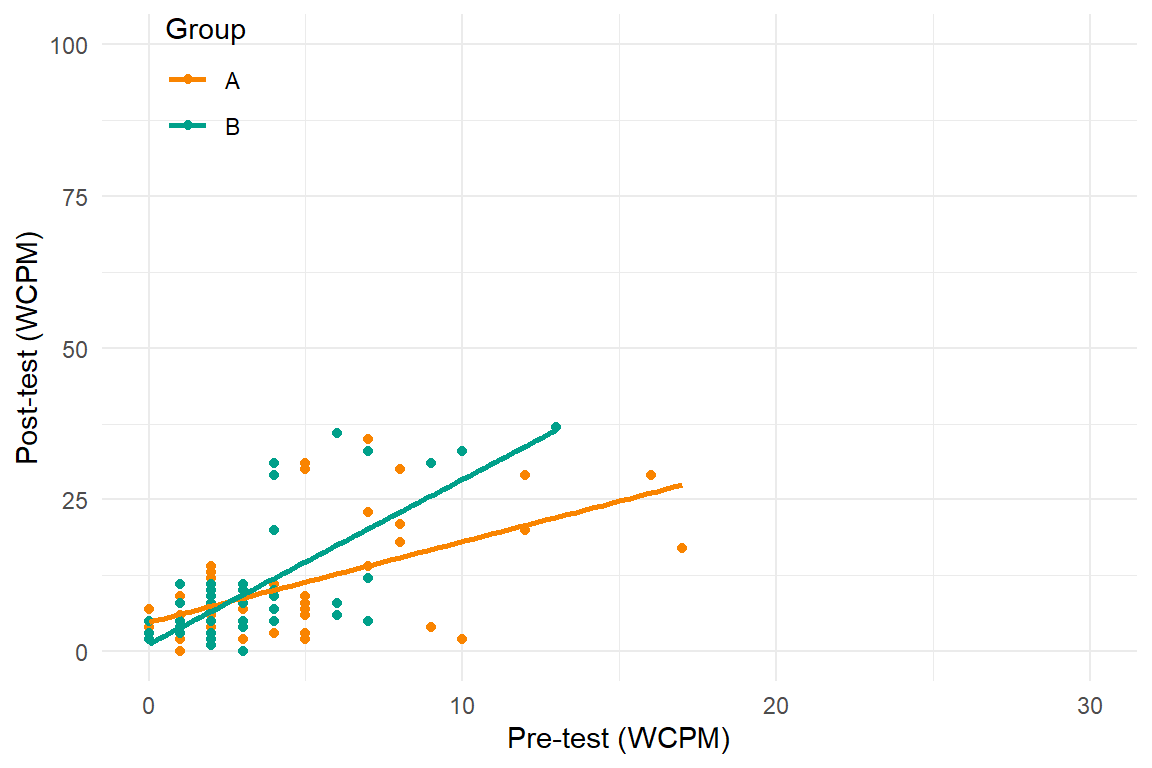

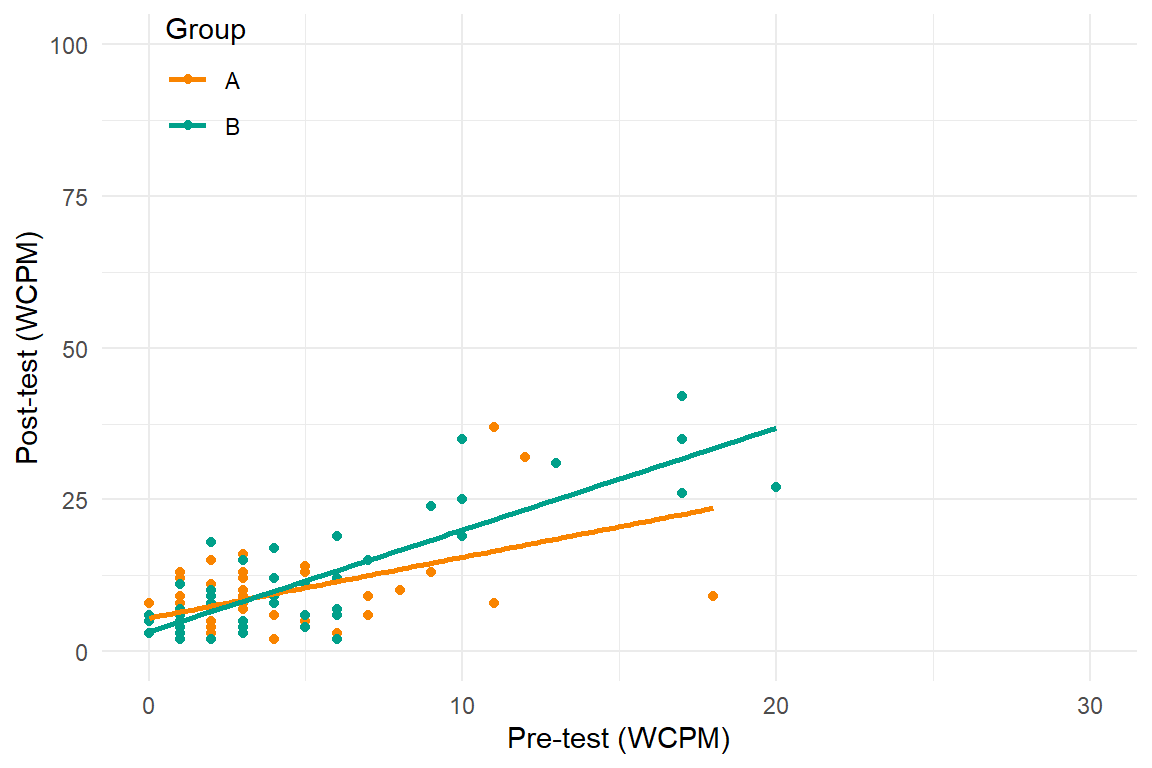

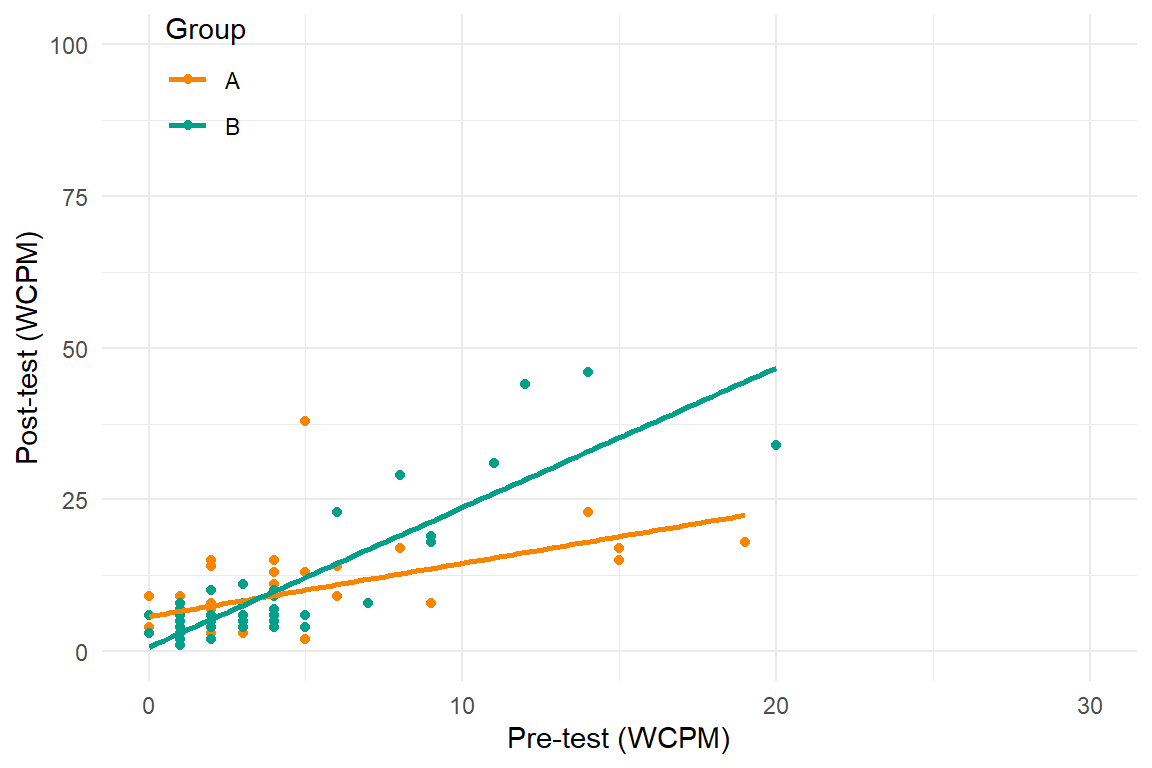

Poor model

Better model

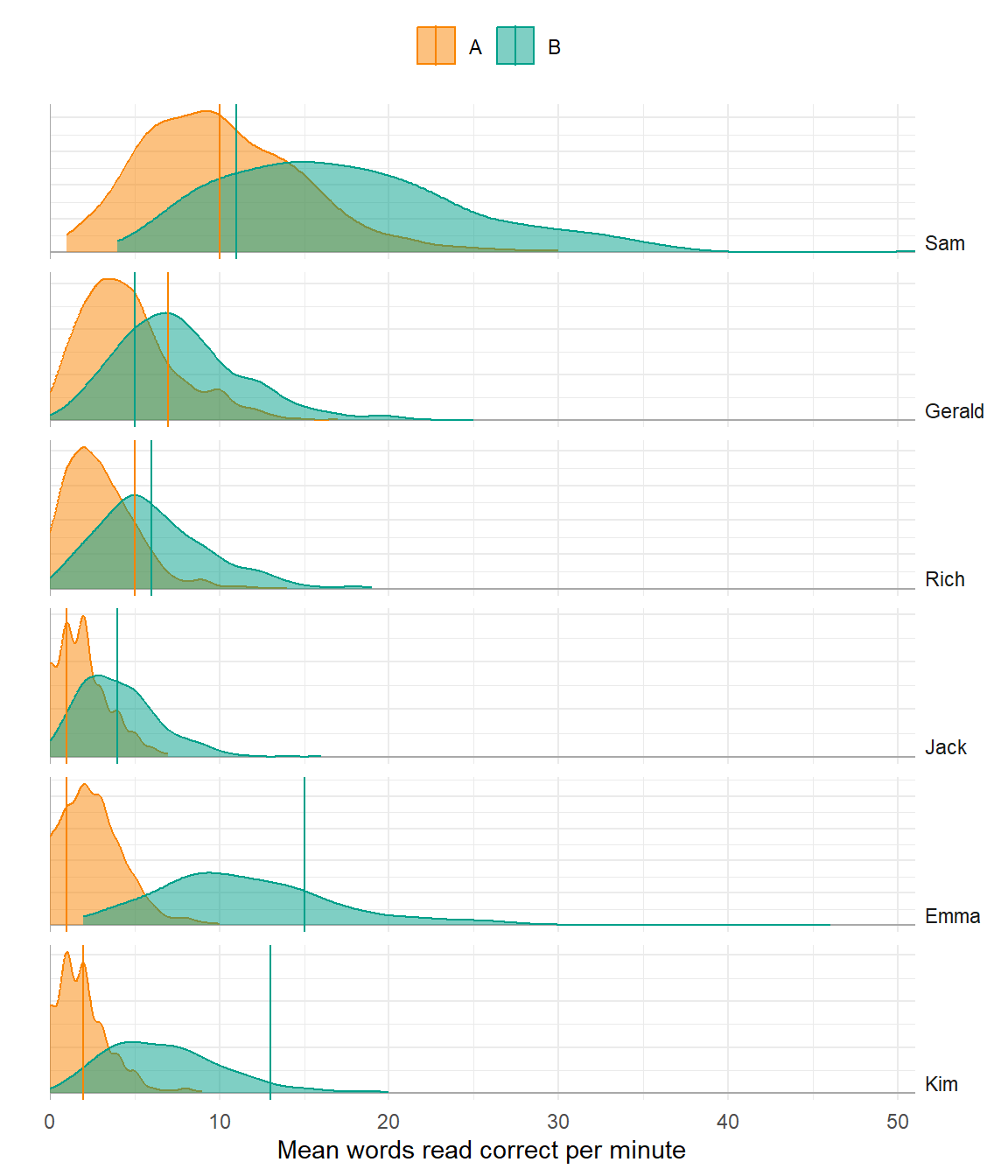

Level

Poor model

Better model

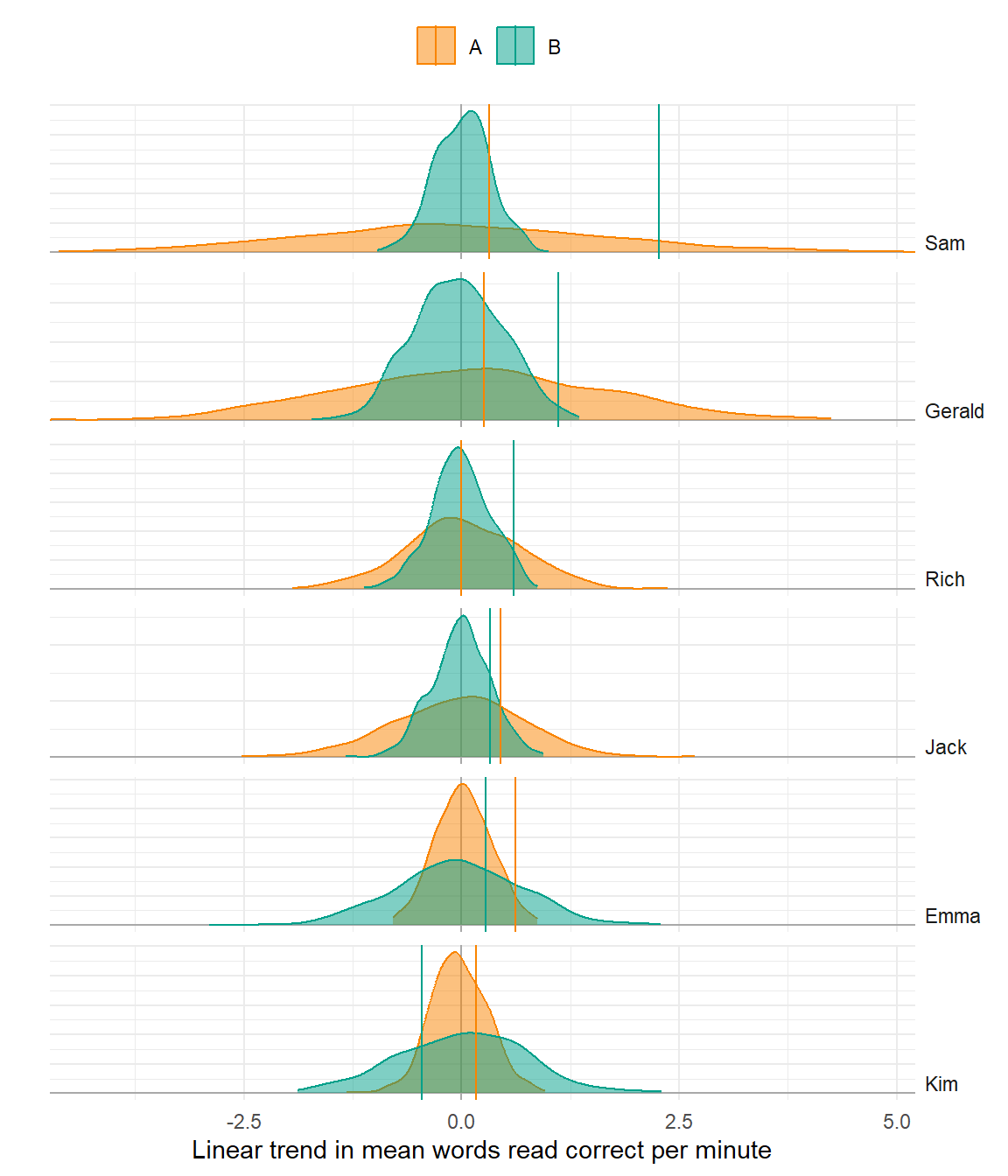

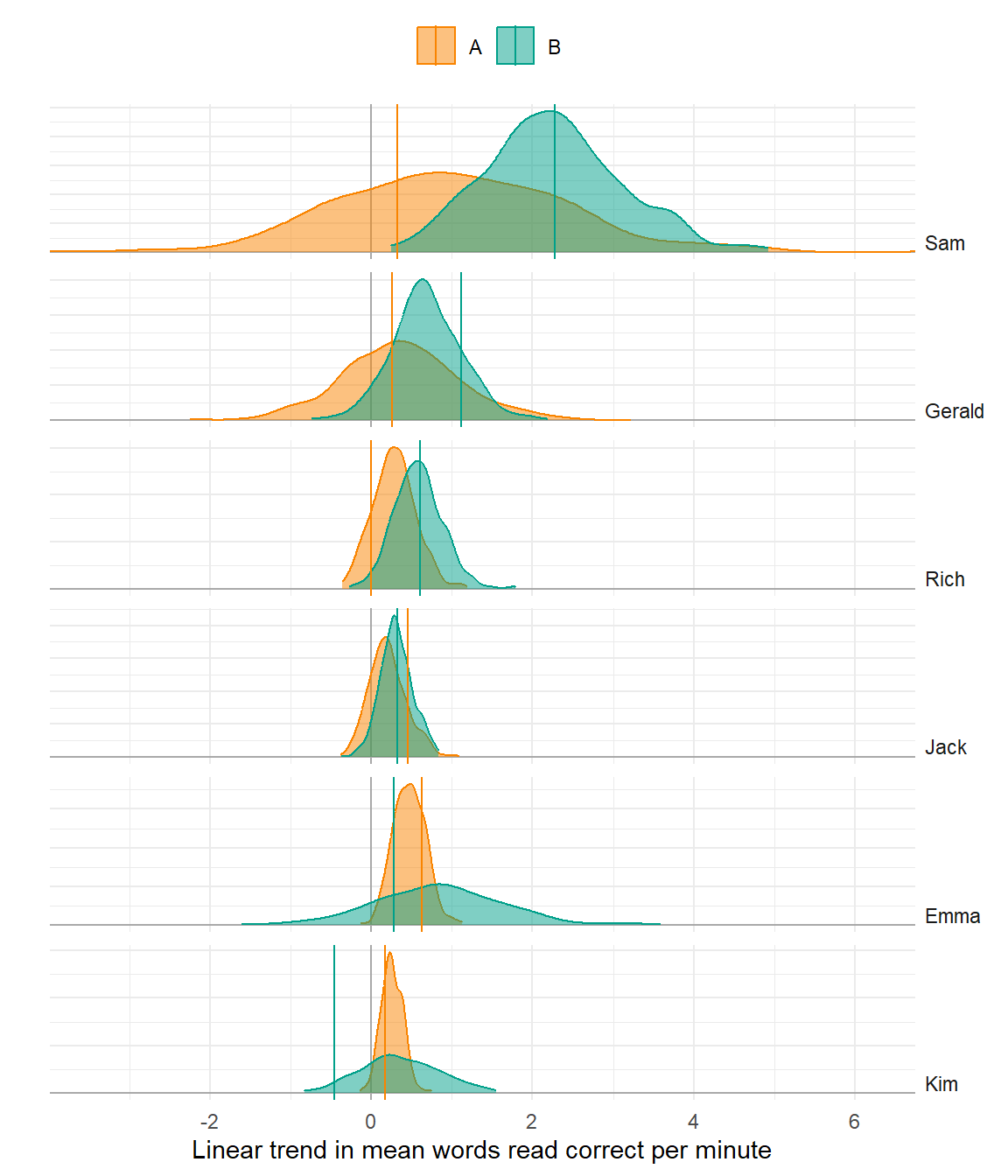

Trend

Poor model

Better model

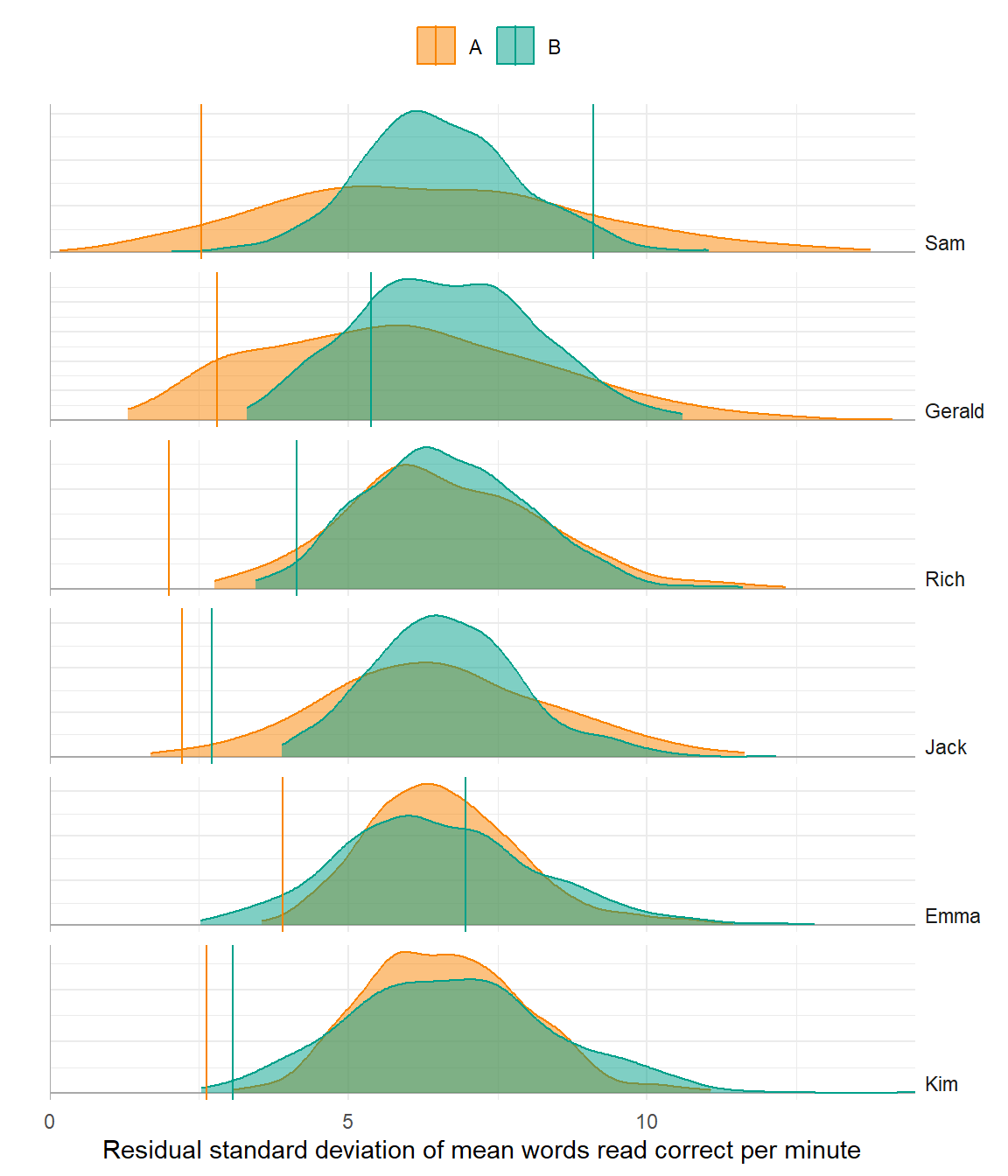

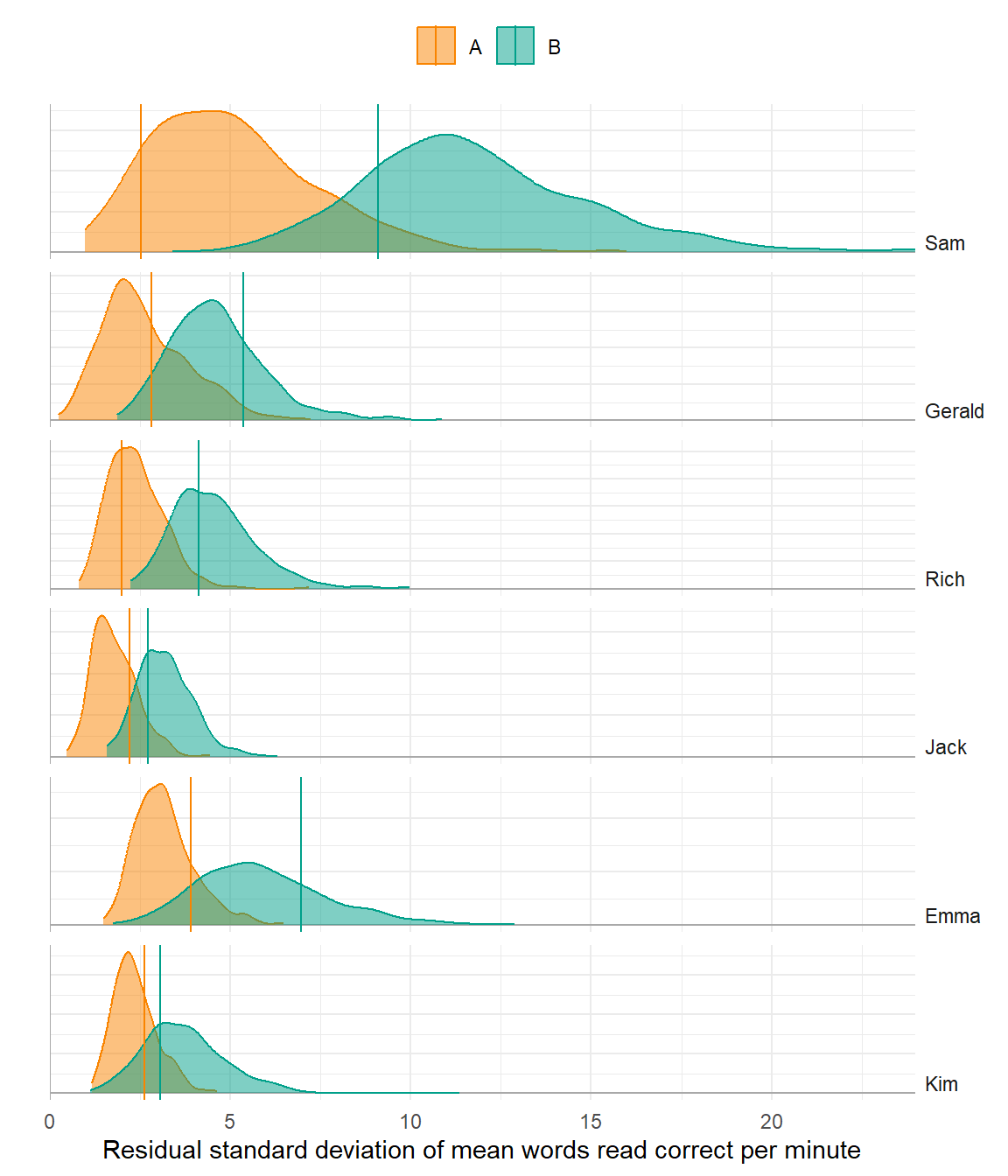

Variability

Poor model

Better model

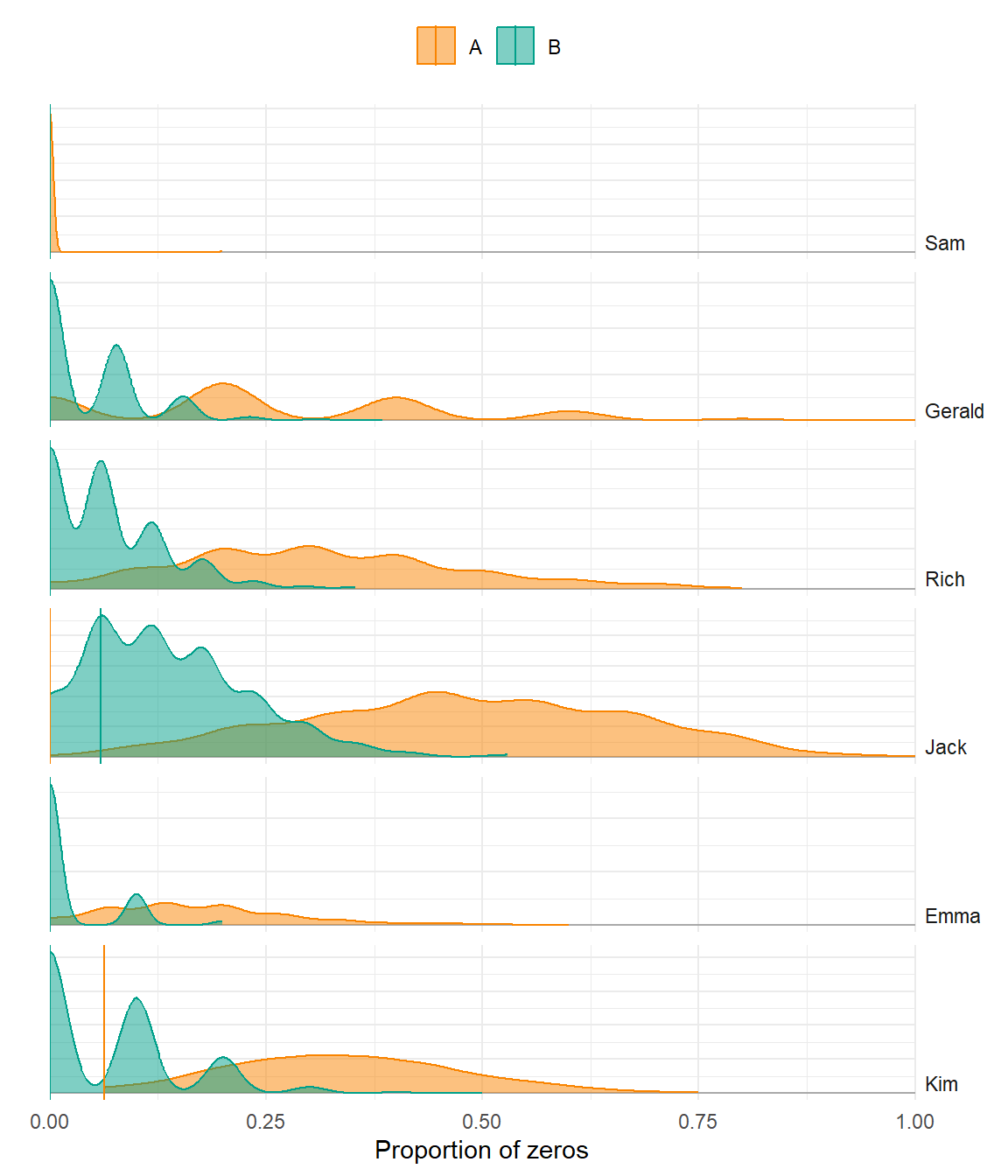

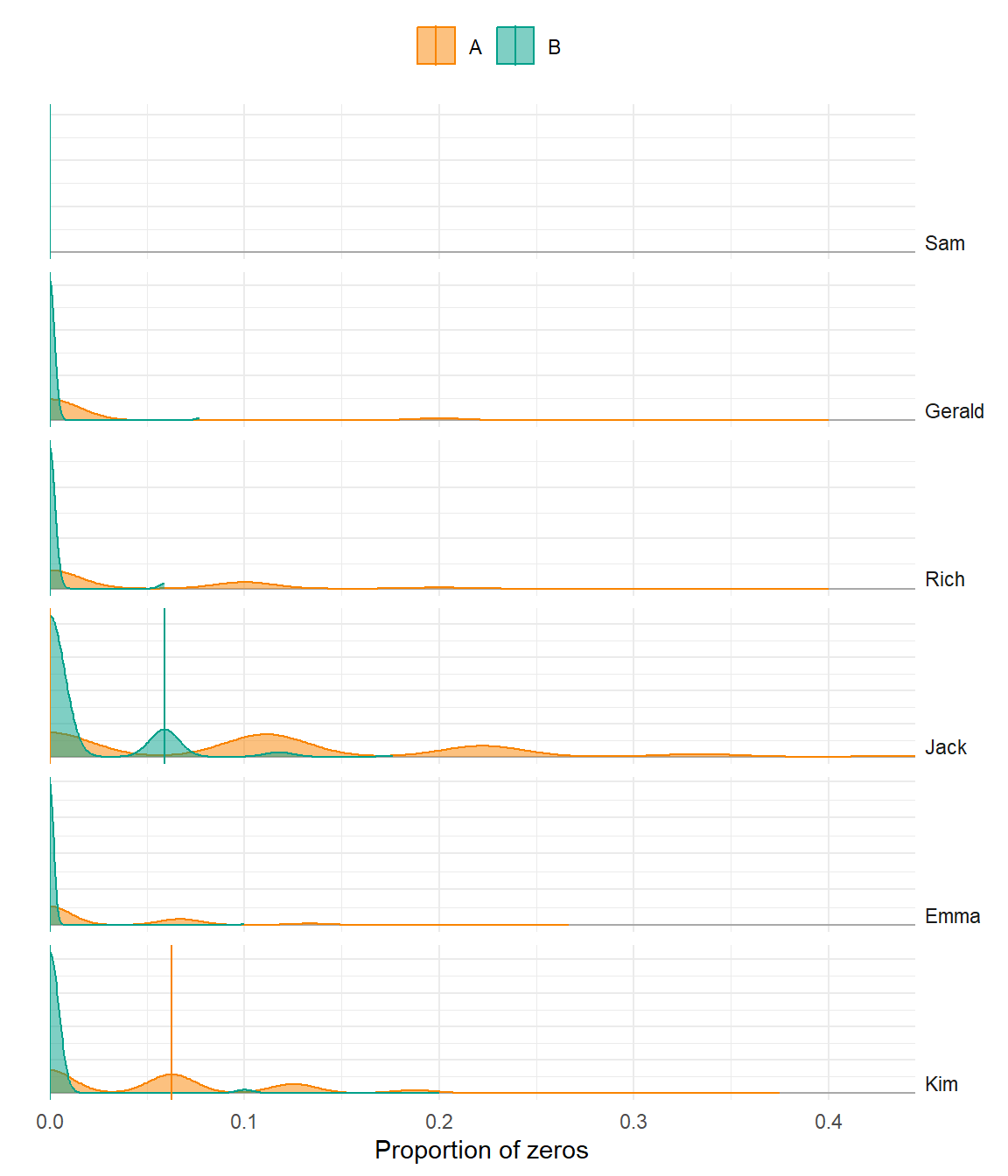

Percentage of Zeros

Poor model

Better model

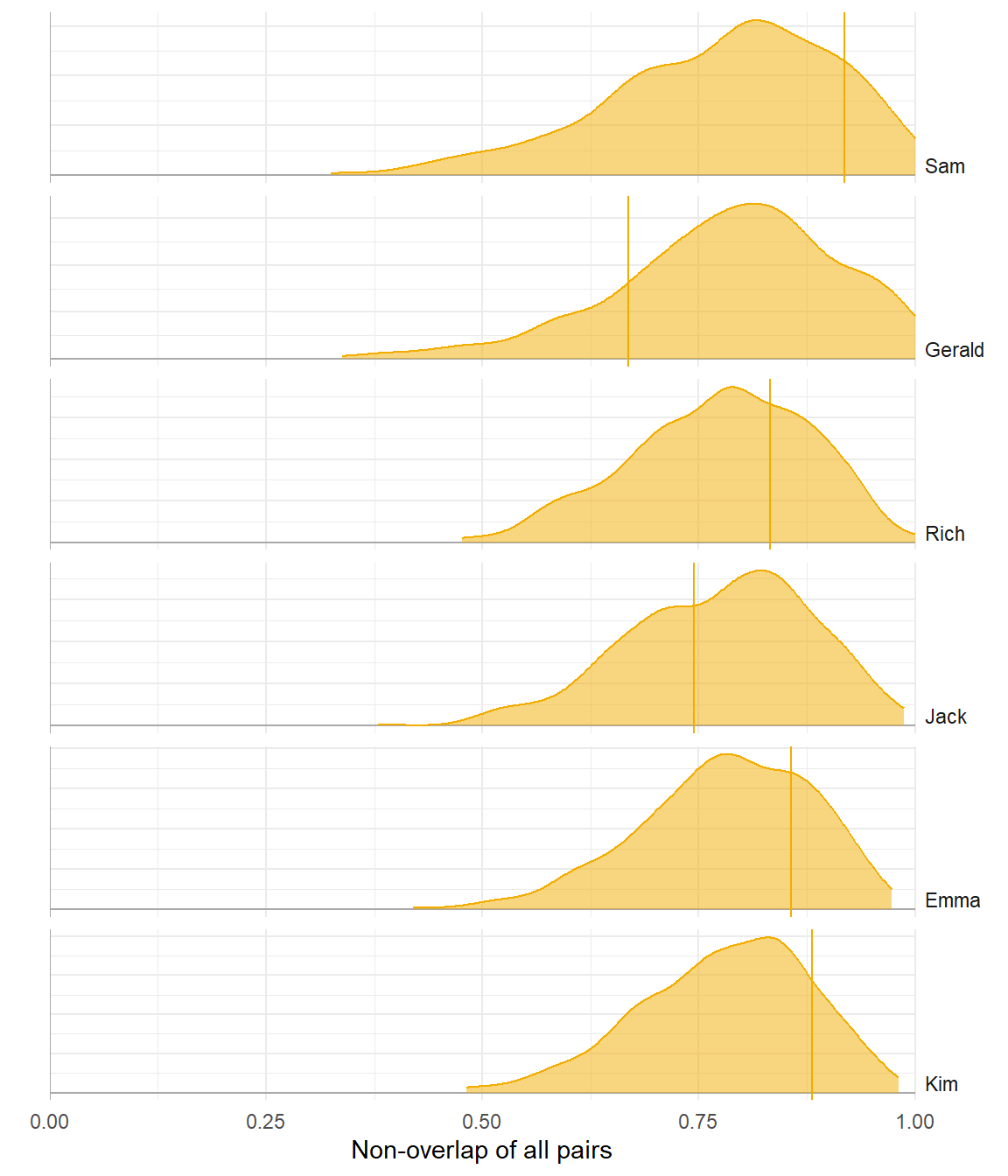

(Non-)Overlap

Poor model

Better model

Auto-correlation

Poor model

Better model

Predicting with new cases

Examples so far are all about predicting possible data for the original participants.

With hierarchical models, we can also predict possible data for new participants.

- In meta-analytic models, we can predict possible data for new studies with new participants.
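Predicting for a new participant differs from predicting for an original participant in one step: the participant-level effect is drawn fresh from the population distribution instead of being taken from the posterior for an observed case. The sketch below assumes a toy two-level normal model for baseline levels. The parameter names `mu`, `tau`, and `sigma`, the function name, and the posterior draws are all hypothetical stand-ins.

```python
import random
import statistics

def simulate_new_participant(draw, n_sessions, rng):
    """Simulate a baseline series for a participant who was not in the study.

    Toy hierarchical model (an assumption for illustration):
    theta_new ~ Normal(mu, tau), then y_t ~ Normal(theta_new, sigma).
    """
    theta_new = rng.gauss(draw["mu"], draw["tau"])
    return [rng.gauss(theta_new, draw["sigma"]) for _ in range(n_sessions)]

# Stand-in for MCMC output: made-up posterior draws.
rng = random.Random(7)
draws = [
    {
        "mu": rng.gauss(30, 2),             # population mean baseline level
        "tau": abs(rng.gauss(8, 1)),        # between-participant SD
        "sigma": abs(rng.gauss(5, 0.5)),    # within-participant SD
    }
    for _ in range(1000)
]

# Baseline summary statistic (mean of 5 sessions) for each simulated new participant.
new_baseline_means = [
    statistics.mean(simulate_new_participant(d, 5, rng)) for d in draws
]
print(round(statistics.mean(new_baseline_means), 1),
      round(statistics.stdev(new_baseline_means), 1))
```

The spread of these simulated baseline means reflects between-participant variation as well as parameter uncertainty, so it can be judged against what is known about the population being studied.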

Baseline summary statistics for new participants

Counterfactual/hypothetical predictions

- Predictive checks can be generated for hypothetical scenarios such as different study designs with different numbers of participants.

Limitations

Predictive checking methods are limited to parametric statistical models.

I have demonstrated posterior predictive checking using Bayesian methods.

- Predictive checks for frequentist/likelihood-based estimation methods are possible but not quite as streamlined.
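One possible likelihood-based analogue is a parametric-bootstrap-style check: simulate new data from the model at its maximum likelihood estimates. The sketch below is an assumption about how such a check could look, not the presenter's method. It uses made-up count data and a deliberately simple normal model, and it ignores uncertainty in the estimates, which is part of why this route is less streamlined than the Bayesian one.

```python
import random
import statistics

# Made-up session counts for illustration only.
observed = [0, 0, 1, 0, 3, 0, 2, 0, 0, 5]

# Maximum likelihood estimates for a normal model.
mu_hat = statistics.mean(observed)
sigma_hat = statistics.pstdev(observed)  # ML estimate divides by n, not n - 1

# Simulate replicated data sets from the fitted model.
rng = random.Random(2025)
n_reps = 2000
replicates = [
    [rng.gauss(mu_hat, sigma_hat) for _ in observed] for _ in range(n_reps)
]

# Plausibility check: counts cannot be negative, so how often does the
# fitted model produce at least one negative value?
share_with_negative = sum(min(rep) < 0 for rep in replicates) / n_reps
print(round(share_with_negative, 2))
```

A high share of replicates with impossible values signals that the normal model tells an unrealistic story for count outcomes like these.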

Open questions

Which summary statistics are generally useful?

How to do raw data graphical checks for meta-analytic models?

How to make the computations easier and more feasible?

How to share predictive checks as part of a study report?

Summary

Predictive checks are a useful and accessible way to evaluate the credibility of a parametric statistical model.

Judgments can be informed by context and subject-matter expertise.

Interpretation focuses on observable data, not unobservable parameters.

A potential bridge between statistical and visual analysis.

Next time you need to evaluate a statistical model of single-case data, ask:

Can we look at some predictive checks?

References