Publication bias (or, more generally, outcome reporting bias or dissemination bias) is recognized as a critical threat to the validity of findings from research syntheses. In the areas with which I am most familiar (education and psychology), it has become more or less a requirement for research synthesis projects to conduct analyses to detect the presence of systematic outcome reporting biases. Some analyses go further by trying to correct for the distorting effects of such biases on average effect size estimates. Widely known analytic techniques for doing so include Begg and Mazumdar’s rank-correlation test, the Trim-and-Fill technique proposed by Duval and Tweedie, and Egger regression (in its many variants). Another class of methods involves selection models (or weight function models), as proposed by Hedges and Vevea, Vevea and Woods, and others. As far as I can tell, selection models are well known among methodologists but very seldom applied due to their complexity and lack of ready-to-use software (though an R package has recently become available). More recent proposals include the PET-PEESE technique introduced by Stanley and Doucouliagos; Simonsohn, Nelson, and Simmons’s p-curve technique; Van Assen, Van Aert, and Wicherts’s p-uniform; and others. The list of techniques grows by the day.

Among these methods, Egger regression, PET, and PEESE are superficially quite appealing due to their simplicity. These methods each involve estimating a fairly simple meta-regression model, using as the covariate the sampling variance of the effect size or some transformation thereof. PET uses the standard error of the effect size as the regressor; PEESE uses the sampling variance (i.e., the squared standard error); PET-PEESE involves first testing whether the PET estimate is statistically significant, using PEESE if it is or PET otherwise. The intercept from one of these regressions is the average effect size estimate from a study with zero sampling variance; the estimated intercept is used as a “bias-corrected” estimator of the population average effect. These methods are also appealing due to their extensibility. Because they are just meta-regressions, it is comparatively easy to extend them to meta-regression models that control for further covariates, to use robust variance estimation to account for dependencies among effect size estimates, etc.
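To make the mechanics concrete, here is a minimal Python sketch of PET, PEESE, and the conditional PET-PEESE rule as described above. It is only an illustration, not the code behind the simulations in this post. The inverse-variance weights, the two-sided criterion, and the `crit` value of 1.96 are my assumptions, and the function names are hypothetical.

```python
import math

def wls_line(y, x, w):
    """Weighted least-squares fit of y = b0 + b1 * x.

    Returns (b0, b1, se_b0, se_b1). Standard errors use the estimated
    residual variance, i.e. a multiplicative dispersion term.
    """
    sw = sum(w)
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    ybar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - xbar) * (yi - ybar) for wi, xi, yi in zip(w, x, y))
    b1 = sxy / sxx
    b0 = ybar - b1 * xbar
    rss = sum(wi * (yi - b0 - b1 * xi) ** 2 for wi, xi, yi in zip(w, x, y))
    s2 = rss / (len(y) - 2)
    se_b0 = math.sqrt(s2 * (1 / sw + xbar ** 2 / sxx))
    se_b1 = math.sqrt(s2 / sxx)
    return b0, b1, se_b0, se_b1

def pet(d, se):
    """PET: regress effect sizes on their standard errors (assumed 1/se^2 weights)."""
    w = [1 / s ** 2 for s in se]
    return wls_line(d, se, w)

def peese(d, se):
    """PEESE: regress effect sizes on their sampling variances (assumed 1/se^2 weights)."""
    w = [1 / s ** 2 for s in se]
    return wls_line(d, [s ** 2 for s in se], w)

def pet_peese(d, se, crit=1.96):
    """Conditional estimator: PEESE intercept if the PET intercept is
    significant, PET intercept otherwise. The two-sided rule and crit
    value are assumptions made for this sketch."""
    b0, _, se_b0, _ = pet(d, se)
    if abs(b0) > crit * se_b0:
        return peese(d, se)[0]
    return b0
```

In each case the intercept `b0` plays the role of the "bias-corrected" estimate, and the slope `b1` with its standard error is what a test for small-study effects would examine.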

In a recent blog post, Uri Simonsohn reports some simulation evidence indicating that the PET-PEESE estimator can have large biases under certain conditions, even in the absence of publication bias. The simulations are based on standardized mean differences from two-group experiments and involve simulating collections of studies that include many with small sample sizes, as might be found in certain areas of psychology. On the basis of these performance assessments, he argues that this purported cure is actually worse than the disease: PET-PEESE should not be used in meta-analyses of psychological research because it performs too poorly to be trusted. In a response to Uri’s post, Joe Hilgard suggests that some simple modifications to the method can improve its performance. Specifically, Joe suggests using a function of sample size as the covariate (in place of the standard error or sampling variance of the effect size estimate).

In this post, I follow up on Joe’s suggestions while replicating and expanding upon Uri’s simulations, to try to provide a fuller picture of the relative performance of these estimators. In brief, the simulations show that:

- Tests for small-sample bias that use PET or PEESE can have wildly incorrect type-I error rates in the absence of publication bias. Don’t use them.

- The sample-size variants of PET and PEESE do maintain the correct type-I error rates in the absence of publication bias.

- The sample-size variants of PET and PEESE are exactly unbiased in the absence of publication bias.

- However, these adjusted estimators still have a cost, being less precise than the conventional fixed-effect estimator.

- In the presence of varying degrees of publication bias, none of the estimators consistently out-performs the others. If you really really need to use a regression-based correction, the sample-size variant of PEESE seems like it might be a reasonable default method, but it’s still really pretty rough.

Why use sample size?

To see why it makes sense to use a function of sample size as the covariate for PET-PEESE analyses, rather than using the standard error of the effect size estimate, let’s look at the formulas. Say that we have a standardized mean difference estimate from a two-group design (without covariates) with sample sizes

where

where

In PET-PEESE analysis,

Consequently, it makes sense to use only the first term of

The second odd thing is that

This second problem with regression tests for publication bias has been recognized for a while in the literature (e.g., Macaskill, Walter, & Irwig, 2001; Peters et al., 2006; Moreno et al., 2009), but most of the work here has focused on other effect size measures, like odds ratios, that are relevant in clinical medicine. The behavior of these estimators might well differ for

Below I’ll investigate how this stuff works with standardized mean differences, which haven’t been studied as extensively as odds ratios. Actually, I know of only two simulation studies that examined the performance of PET-PEESE methods with standardized mean difference estimates: Inzlicht, Gervais, and Berkman (2015) and Stanley (2017). (Know of others? Leave a comment!) Neither considered using sample-size variants of PET-PEESE. The only source I know of that did consider this is this blog post from Will Gervais, which starts out optimistic about the sample-size variants but ends on a discouraged note. The simulations below build upon Will’s work, as well as Uri’s, by 1) considering a more extensive set of data-generating processes and 2) examining accuracy in addition to bias.
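To illustrate the argument for a sample-size covariate, here is a small Python sketch. It assumes the usual large-sample approximation for the sampling variance of a standardized mean difference, V = (n0 + n1) / (n0 n1) + d² / (2 (n0 + n1)), and treats the first term as the sample-size-only quantity. The function names are hypothetical, and taking the square root of that term for the PET-style covariate is my reading of the suggestion rather than something spelled out above.

```python
import math

def smd_variance(d, n0, n1):
    """Large-sample approximation to the sampling variance of a
    standardized mean difference from a two-group design."""
    return (n0 + n1) / (n0 * n1) + d ** 2 / (2 * (n0 + n1))

def sample_size_term(n0, n1):
    """First term of the variance only: depends on sample sizes, not on d."""
    return (n0 + n1) / (n0 * n1)

def covariates(d, n0, n1):
    """Covariates for the conventional and sample-size variants (a sketch)."""
    v = smd_variance(d, n0, n1)
    w = sample_size_term(n0, n1)
    return {
        "PET": math.sqrt(v),    # standard error, depends on d
        "PEESE": v,             # sampling variance, depends on d
        "SPET": math.sqrt(w),   # assumed sample-size analogue of the SE
        "SPEESE": w,            # sample-size analogue of the variance
    }

# Same sample sizes, different estimates: the conventional covariates move
# with d, while the sample-size covariates stay fixed.
for d in (0.0, 0.5, 1.0):
    print(d, covariates(d, 20, 20))
```

The point of the comparison is that the conventional covariates are themselves functions of the effect size estimate, so they are correlated with it by construction, even when there is no selective publication.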

Simulation model

The simulations are based on the following data-generating model, which closely follows the structure that Uri used:

Per-cell sample size is generated as

True effects are simulated as

Standardized mean difference effect size estimates are generated as in a two-group between-subjects experiment with equal per-cell sample sizes. I do this by taking

(That first term is Hedges’

Observed effects are filtered based on statistical significance. Let

Each meta-analysis includes a total of

For each simulated meta-sample, I calculated the following:

- the usual fixed-effect meta-analytic average (I skipped random effects for simplicity);

- the PET estimator (including intercept and slope);

- the PEESE estimator (including intercept and slope);

- PET-PEESE, which is equal to the PEESE intercept if

- the modified PET estimator, which I’ll call “SPET” for “sample-size PET” (suggestions for better names welcome);

- the modified PEESE estimator, which I’ll call “SPEESE”; and

- SPET-SPEESE, which follows the same conditional logic as PET-PEESE.

Simulation results are summarized across 4000 replications. The R code for all this lives here. Complete numerical results live here. Code for creating the graphs below lives here.
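For readers who want a feel for the data-generating steps described above, here is a rough, independent Python sketch (not the R code referenced above). Several details are assumptions on my part: the uniform distribution of per-cell sample sizes, the minimum per-cell size `n_min`, the one-sided `alpha` of 0.025, the normal approximation to the reference distribution of the test statistic, and all function and argument names.

```python
import math
import random
from statistics import NormalDist, mean, variance

def simulate_study(mu, tau, n_min, n_max, rng):
    """Simulate one two-group study with equal per-cell sample sizes."""
    n = rng.randint(n_min, n_max)        # per-cell sample size (assumed uniform)
    delta = rng.gauss(mu, tau)           # study-specific true effect
    treat = [rng.gauss(delta, 1.0) for _ in range(n)]
    ctrl = [rng.gauss(0.0, 1.0) for _ in range(n)]
    diff = mean(treat) - mean(ctrl)
    s_pooled = math.sqrt((variance(treat) + variance(ctrl)) / 2)
    t_stat = diff / (s_pooled * math.sqrt(2 / n))
    hedges_j = 1 - 3 / (8 * n - 9)       # small-sample correction, df = 2n - 2
    d = hedges_j * diff / s_pooled
    # One-sided p-value; the normal approximation to the t distribution
    # is a simplification made for this sketch.
    p = 1 - NormalDist().cdf(t_stat)
    return {"n": n, "d": d, "p": p}

def simulate_meta(k, mu, tau, pi_nonsig, n_min=12, n_max=50,
                  alpha=0.025, rng=None):
    """Draw studies until k have survived the selection step.

    Significant results are always kept; non-significant results are
    kept with probability pi_nonsig (1 = no selection, 0 = strong selection).
    """
    rng = rng or random.Random()
    kept = []
    while len(kept) < k:
        study = simulate_study(mu, tau, n_min, n_max, rng)
        if study["p"] < alpha or rng.random() < pi_nonsig:
            kept.append(study)
    return kept

# One meta-sample of 100 studies with an intermediate degree of selection.
meta = simulate_meta(k=100, mu=0.4, tau=0.1, pi_nonsig=0.2,
                     rng=random.Random(2017))
print(len(meta), round(mean(s["d"] for s in meta), 3))
```

Setting `pi_nonsig` to 1 gives the no-selection condition, and setting it to 0 gives the condition where only statistically significant effects appear.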

Results

False-positive rates for publication bias detection

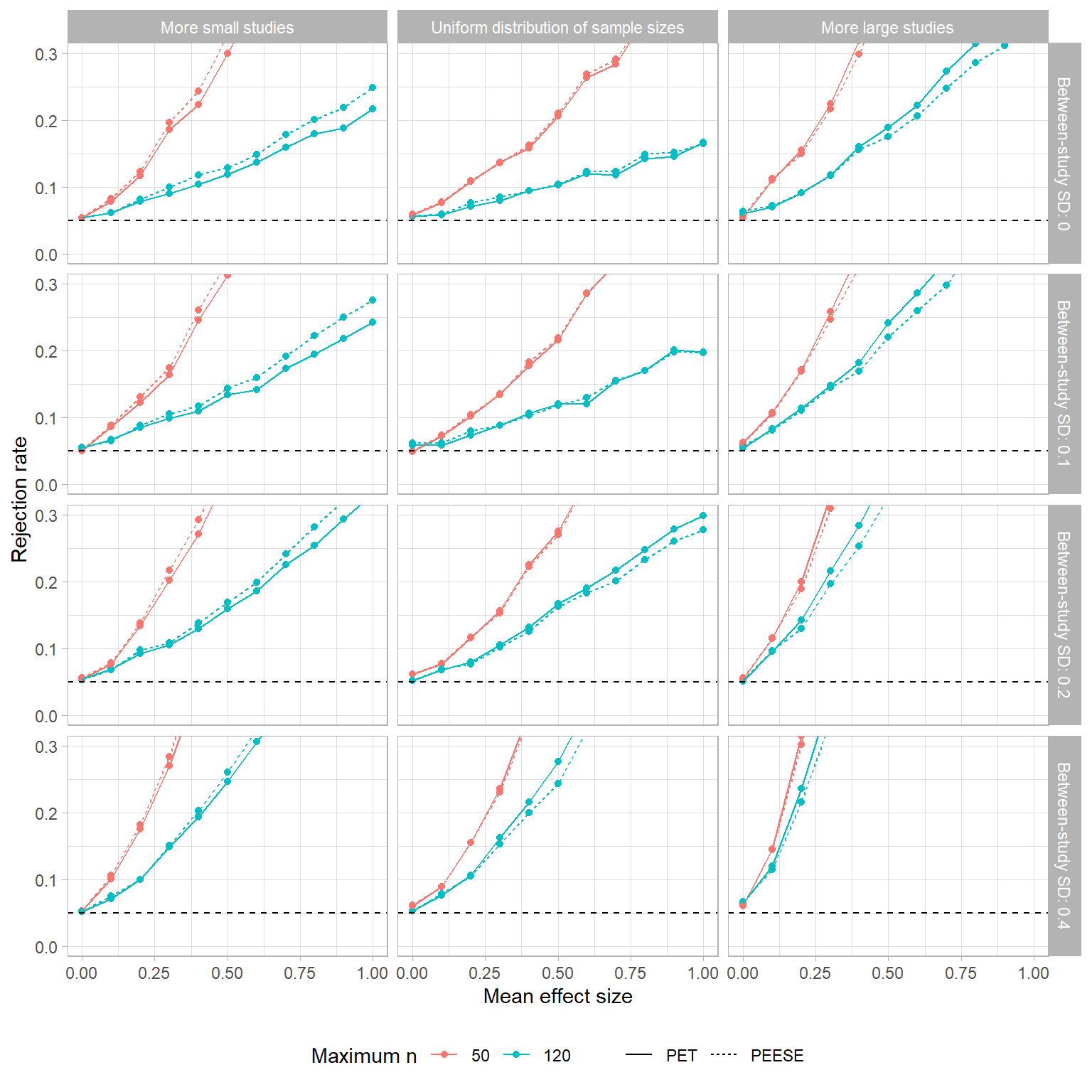

First, let’s consider the performance of PET and PEESE as tests for detecting publication bias. Here, a statistically significant estimate for the coefficient on the SE (for PET) or on

The figure below depicts the Type-I error rates of the PET and PEESE tests when

Both tests are horribly mis-calibrated, tending to reject the null hypothesis far more often than they should. This happens because there is a non-zero correlation between

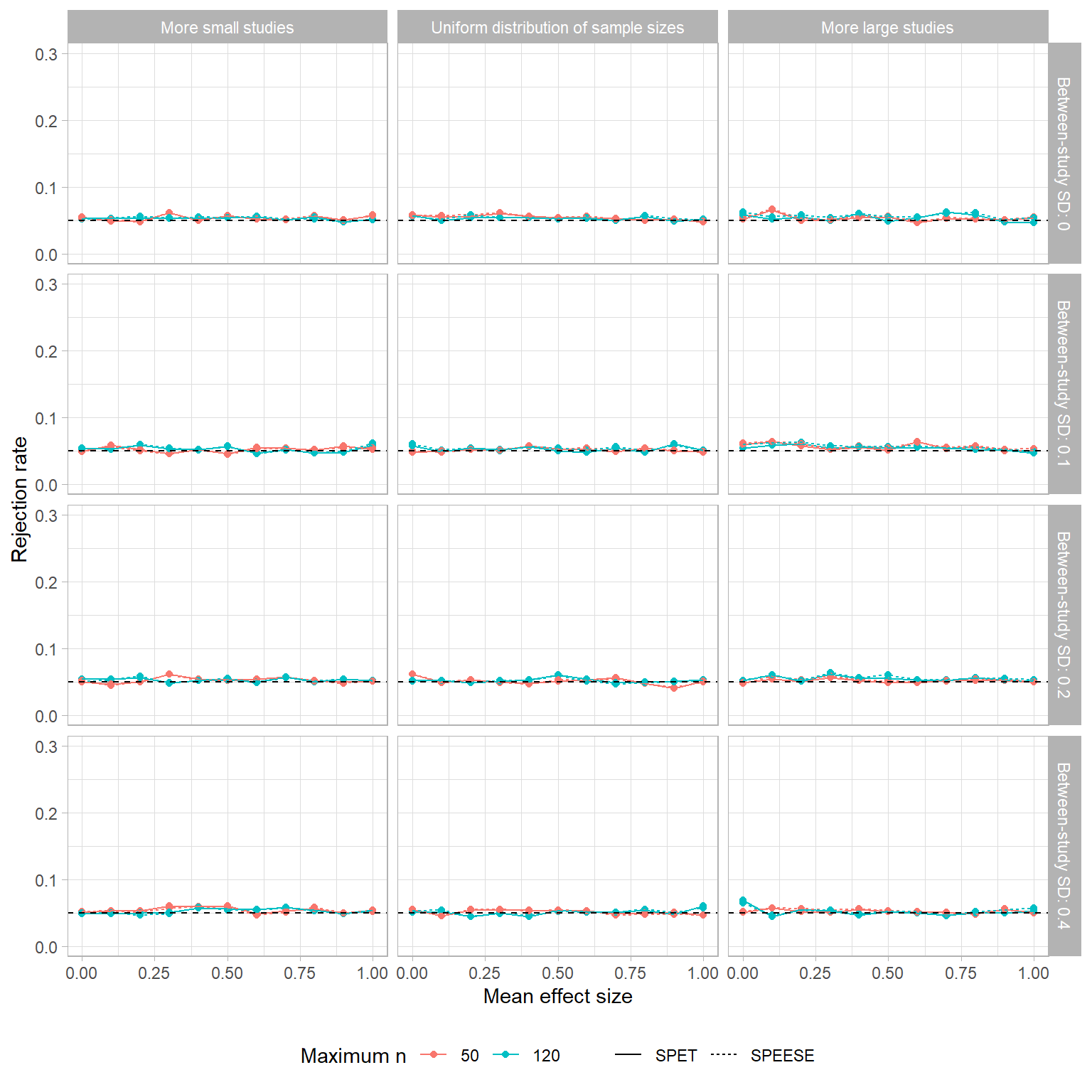

Here’s the same graph, but using the SPET and SPEESE estimators:

Yes, this may be the World’s Most Boring Figure, but it does make clear that both the SPET and SPEESE tests maintain the correct Type-I error rate. (Any variation in rejection rates is just Monte Carlo error.) Thus, it seems pretty clear that if we want to test for small-sample bias, SPET or SPEESE should be used rather than PET or PEESE.
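As a quick check on what "just Monte Carlo error" means with 4000 replications, here is a small Python sketch of the binomial standard error of a simulated rejection rate. The nominal level of 0.05 is an assumption for illustration, and the function names are hypothetical.

```python
import math

def monte_carlo_se(rate, replications):
    """Standard error of a rejection rate estimated from independent replications."""
    return math.sqrt(rate * (1 - rate) / replications)

def monte_carlo_band(rate, replications, z=1.96):
    """Approximate 95% range for the simulated rejection rate of a
    correctly calibrated test with the given nominal rate."""
    se = monte_carlo_se(rate, replications)
    return rate - z * se, rate + z * se

lo, hi = monte_carlo_band(0.05, 4000)
print(f"{lo:.4f} to {hi:.4f}")
```

Under these assumptions, a correctly calibrated test would be expected to show simulated rejection rates between roughly 0.043 and 0.057, so wiggles of that size are not evidence of mis-calibration.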

Bias of bias-corrected estimators

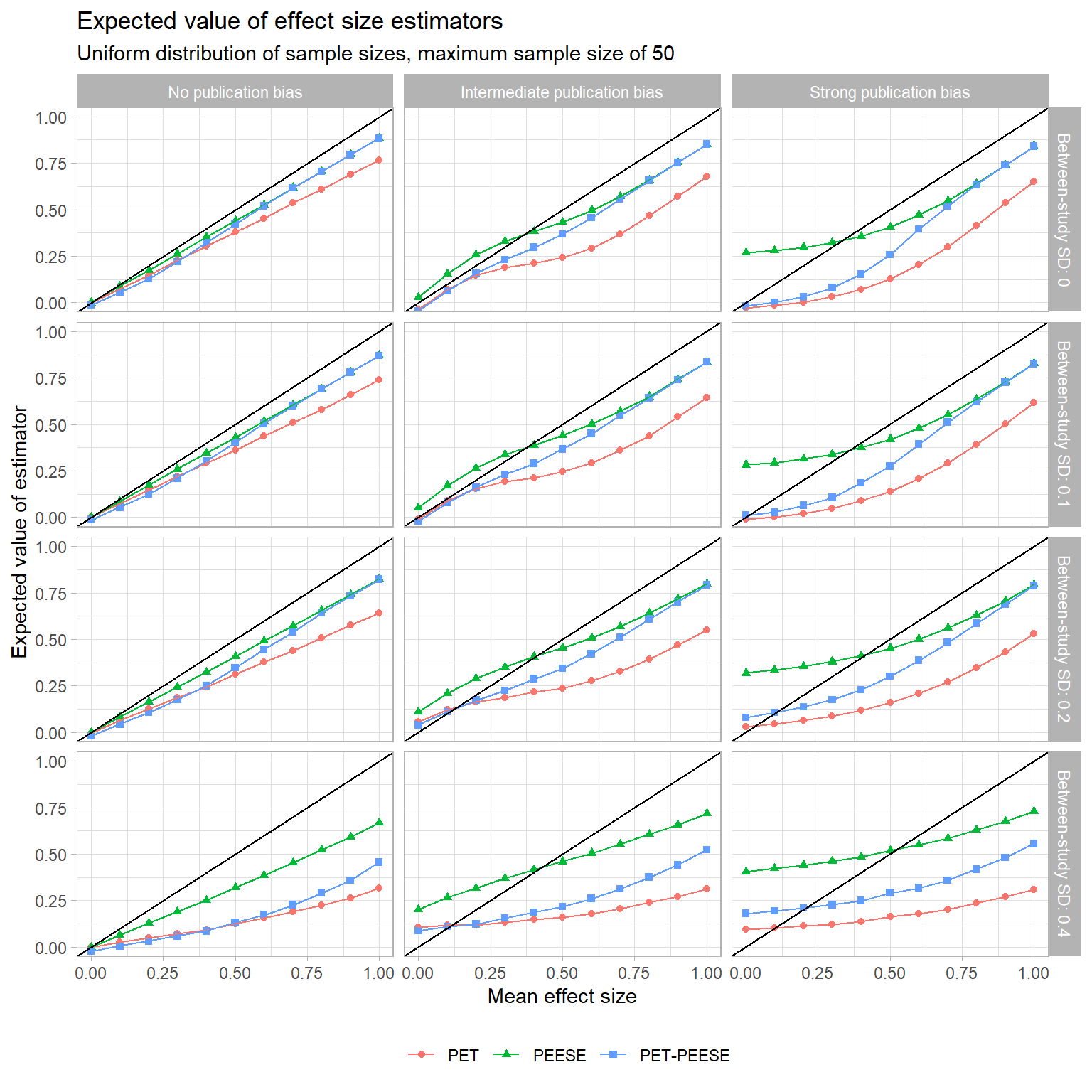

Now let’s consider the performance of these methods as estimators of the population mean effect. Uri’s analysis focused on the bias of the estimators, meaning the difference between the average value of the estimator (across repeated samples) and the true parameter. The plot below depicts the expected level of PET, PEESE, and PET-PEESE as a function of the true mean effect, using the uniform distribution of studies and a maximum sample size of

All three of these estimators are pretty bad in terms of bias. In the absence of publication bias, they consistently under-estimate the true mean effect. With intermediate or strong publication bias, PET and PET-PEESE have a consistent downward bias. As an unconditional estimator, PEESE tends to have a positive bias when the true effect is small, but this decreases and becomes negative as the true effect increases. For all three estimators, bias increases as the degree of heterogeneity increases.

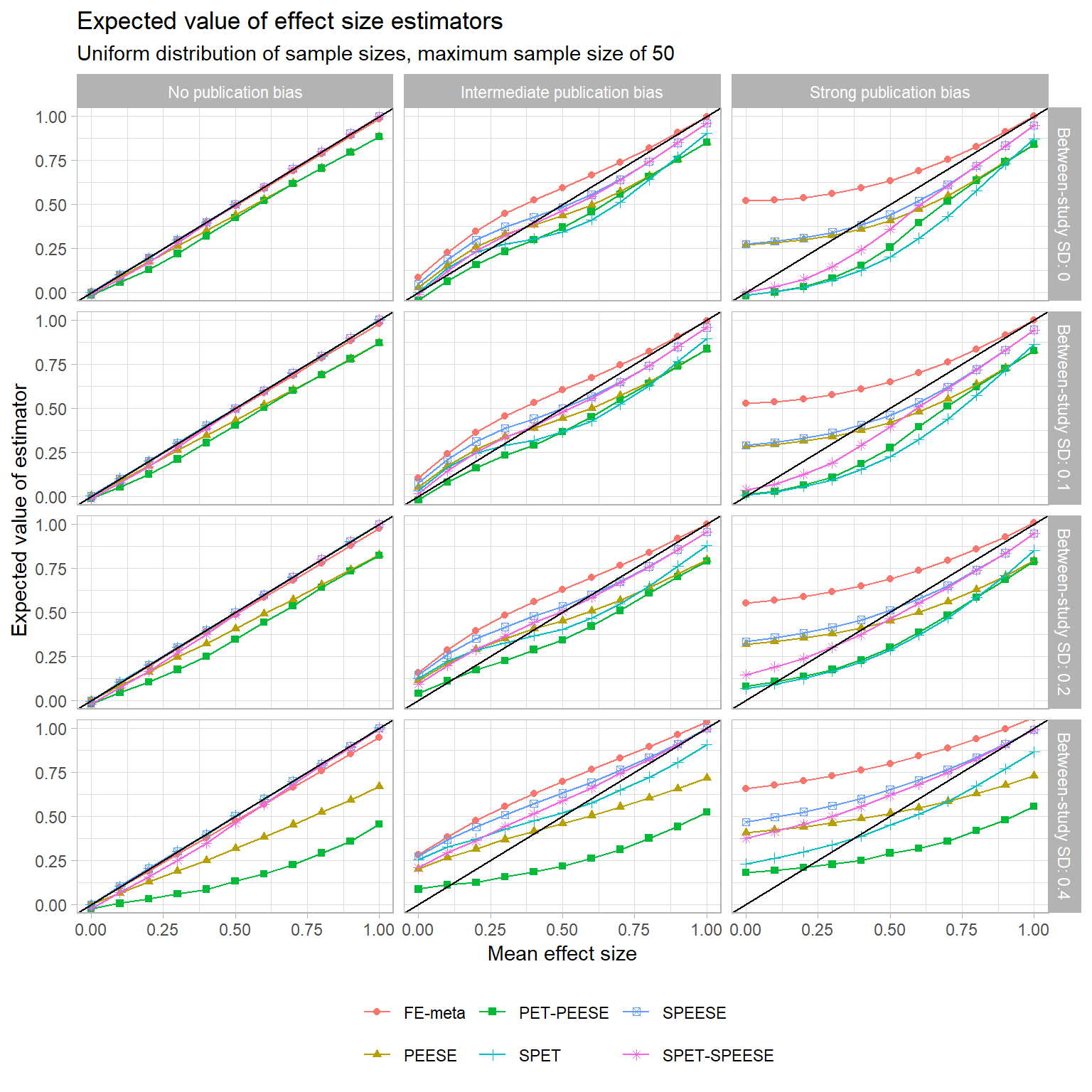

Here is how these estimators compare to the modified SPET, SPEESE, and SPET-SPEESE estimators, as well as to the usual fixed-effect average with no correction for publication bias:

In the left column, we see that SPET and SPEESE are exactly unbiased (and SPET-SPEESE is nearly so) in the absence of selective publication. So is regular old fixed-effect meta-analysis, of course. In the middle and right columns, studies are selected based partially or fully on statistical significance, and things get messy. Overall, there’s no consistent winner between PEESE and SPEESE. At small or moderate levels of between-study heterogeneity, and when the true mean effect is small, PEESE, SPEESE, and SPET-SPEESE have fairly similar biases, but PEESE appears to have a slight edge. This seems to me to be nothing but a fortuitous accident, in that the bias induced by the correlation between

These trends seem mostly to hold for the other sample size distributions I examined too, although the biases of PEESE and PET-PEESE aren’t as severe when the maximum sample size is larger. You can see for yourself here:

Accuracy of bias-corrected estimators

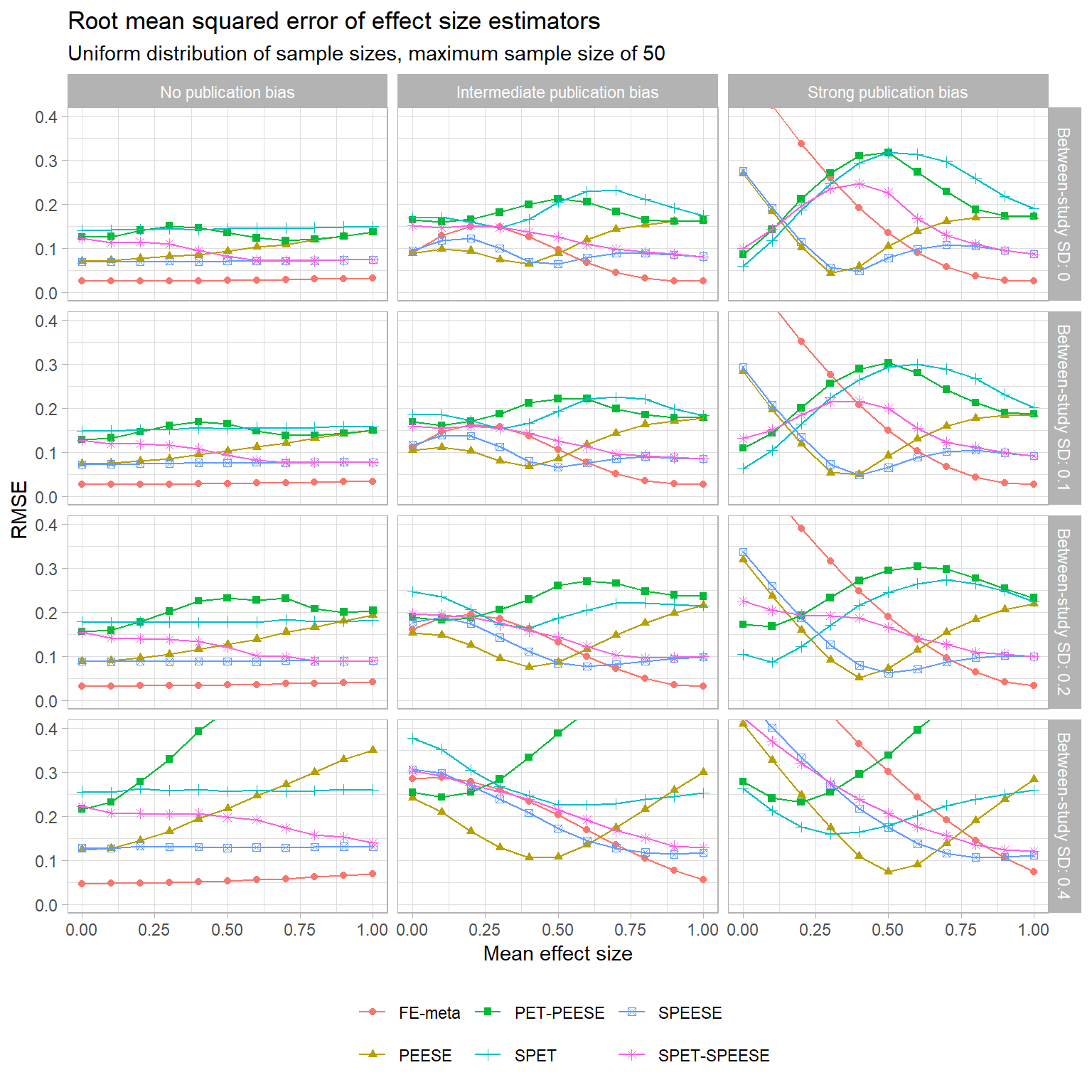

Bias isn’t everything, of course. Now let’s look at the overall accuracy of these estimators, as measured by root mean squared error (RMSE). RMSE is a function of both bias and sampling variance, and so is one way to weigh an estimator that is biased but fairly precise against an estimator that is perfectly unbiased but noisy. The following chart plots the RMSE of all of the estimators (following the same layout as above, just with a different vertical axis):

Starting in the left column where there’s no selective publication, we can see that the normal fixed-effect average has the smallest RMSE (and so is most accurate). The next most accurate is SPEESE, which uniformly beats out PEESE, PET-PEESE, SPET, and SPET-SPEESE. It’s worth noting, though, that there is a fairly large penalty for using SPEESE when it is unnecessary: even with a quite large sample of 100 studies, SPEESE still has twice the RMSE of the FE estimator.
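To make the accuracy measure concrete, here is a minimal Python sketch of how bias, sampling variance, and RMSE relate across simulation replications. The function name and the toy numbers are purely illustrative.

```python
import math
from statistics import fmean, pvariance

def accuracy_summary(estimates, truth):
    """Summarize an estimator across replications.

    MSE equals bias squared plus variance when the variance is computed
    with the replication count (not count minus one) in the denominator.
    """
    bias = fmean(estimates) - truth
    var = pvariance(estimates)
    mse = fmean([(e - truth) ** 2 for e in estimates])
    return {"bias": bias, "variance": var, "rmse": math.sqrt(mse)}

# Toy example: four replications of an estimator whose target is 0.2.
summary = accuracy_summary([0.1, 0.2, 0.3, 0.4], truth=0.2)
print(summary)
```

An unbiased estimator can therefore still lose on RMSE if its variance is large enough, which is the trade-off at issue when comparing the regression-based estimators to the fixed-effect average.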

The middle column shows these estimators’ RMSE when there is an intermediate degree of selective publication. Because of the “fortuitous accident” of how the correlation between

Finally, the right column plots RMSE when there’s strong selective publication, so only statistically significant effects appear. Just as in the middle column, PEESE edges out SPEESE for smaller values of the true mean effect. For very small true effects, both of these estimators are edged out by PET-PEESE and SPET-SPEESE. This only holds over a very small range for the true mean effect though, and for true effects above that range these conditional estimators perform poorly—consistently worse than just using PEESE or SPEESE.

Here are charts for the other sample size distributions:

- Uniform distribution of studies, maximum sample size of 120

- More small studies, maximum sample size of 50

- More small studies, maximum sample size of 120

- More large studies, maximum sample size of 50

- More large studies, maximum sample size of 120

The trends that I’ve noted mostly seem to hold for the other sample size distributions (but correct me if you disagree! I’m getting kind of bleary-eyed at the moment…). One difference worth noting is that when the sample size distribution skews towards having more large studies, the accuracy of the regular fixed-effect estimator improves a bit. At intermediate degrees of selective publication, the fixed-effect estimator is consistently more accurate than SPEESE, and mostly more accurate than PEESE too. With strong selective publication, though, the FE estimator blows up just as before.

Conclusions, caveats, further thoughts

Where does this leave us? The one thing that seems pretty clear is that if the meta-analyst’s goal is to test for potential small-sample bias, then SPET or SPEESE should be used rather than PET or PEESE. Beyond that, we’re in a bit of a morass. None of the estimators consistently out-performs the others across the conditions of the simulation. It’s only under certain conditions that any of the bias-correction methods are more accurate than using the regular FE estimator, and those conditions aren’t easy to identify in a real data analysis because they depend on the degree of publication bias.

Caveats

These findings are also pretty tentative because of the limitations of the simulation conditions examined here. The distribution of sample sizes seems to affect the relative accuracy of the estimators to a certain degree, but I’ve only looked at a limited set of possibilities, and also limited consideration to rather large meta-samples of 100 studies.

Another caveat is that the simulations are based on

A further, quite important caveat is that selective publication is not the only possible explanation for a correlation between effect size and sample size. In another recent post, Uri sketches a scenario where investigators choose sample size to achieve adequate power (so following best practice!) for predicted effect sizes. If 1) true effects are heterogeneous and 2) investigators’ predictions are correlated with true effect sizes, then a meta-analysis will have effect size estimates that are correlated with sample size even in the absence of publication bias. A blog post by Richard Morey illustrates another possibility that leads to effect-sample size correlation, in which resource constraints induce negative correlation between sample size and the reliability of the outcome measure.
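The power-analysis scenario is easy to mimic in a toy simulation. The Python sketch below is my own simplified version, not Uri's code: the prediction-noise model, the floor on predicted effects (`min_pred`), the normal approximation for the effect size estimates, and every parameter value are assumptions chosen for illustration.

```python
import math
import random
from statistics import NormalDist

def pearson(x, y):
    """Plain Pearson correlation, written out to avoid version-specific helpers."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def simulate_powered_studies(k, mu, tau, pred_noise, rng,
                             power=0.80, alpha=0.05, min_pred=0.15):
    """Every study is 'published'; sample size is chosen by power analysis."""
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    ns, ds = [], []
    for _ in range(k):
        delta = rng.gauss(mu, tau)                        # heterogeneous true effect
        predicted = max(delta + rng.gauss(0.0, pred_noise), min_pred)
        n = math.ceil(2 * (z_sum / predicted) ** 2)       # per-cell n for target power
        d = delta + rng.gauss(0.0, math.sqrt(2 / n))      # approximate sampling error
        ns.append(n)
        ds.append(d)
    return ns, ds

ns, ds = simulate_powered_studies(2000, mu=0.5, tau=0.2, pred_noise=0.1,
                                  rng=random.Random(42))
print(round(pearson(ns, ds), 2))
```

Because larger predicted effects lead to smaller planned samples, the estimates and sample sizes end up negatively correlated even though no study is suppressed, which is exactly the pattern a regression-based test would flag as small-sample bias.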

Hold me hostage

It seems to me that one lesson we can draw from this is that these regression-based corrections are pretty meager as analytic methods. We need to understand the mechanism of selective publication in order to be able to correct for its consequences, but the regression-based corrections don’t provide direct information here (even though their performance depends on it!). I think this speaks to the need for methods that directly model the mechanism, which means turning to selection models and studying the distribution of p-values. Also, without bringing in other pieces of information (like p-values), it seems more or less impossible to tease apart selective publication from other possible explanations for effect-sample size correlation.

If I had to pick one of the regression-based bias-correction methods to use in an application (as in, if you handcuffed me to my laptop and threatened to not let me go until I analyzed your effect sizes), then on the basis of these simulation exercises, I think I would probably go with SPEESE as a default, and perhaps also report PEESE, but I wouldn’t bother with any of the others. Even though SPEESE is less accurate than PEESE and some other estimators under certain conditions, on a practical level it seems kind of silly to use different estimators when testing for publication bias versus trying to correct for it. And whatever advantage that regular PEESE has over SPEESE strikes me as kind of like cheating: it relies on an induced correlation between

Even if you chained me to the laptop, I would also definitely include a caution that these estimators should be interpreted more as sensitivity analyses than as bias-corrected estimates of the overall mean effect. This is roughly in line with the conclusions of Inzlicht, Gervais, and Berkman (2015). From their abstract:

Our simulations revealed that not one of the bias-correction techniques revealed itself superior in all conditions, with corrections performing adequately in some situations but inadequately in others. Such a result implies that meta-analysts ought to present a range of possible effect sizes and to consider them all as being possible.

Their conclusion was in reference to PET, PEESE, and PET-PEESE. Unfortunately, the tweaks of SPET and SPEESE don’t clarify the situation.

Outstanding questions

These exercises have left me wondering about a couple of things, which I’ll just mention briefly:

- I haven’t calculated confidence interval coverage levels for these simulations. I should probably add that but need to move on at the moment.

- The ever-popular Trim-and-Fill procedure is based on the assumption that a funnel plot will be symmetric in the absence of publication bias. This assumption won’t hold if there’s correlation between

- Under the model examined here, the bias in PET, PEESE, SPET, and SPEESE comes from the fact that the relevant regression relationships aren’t actually linear under selective publication. I do wonder whether using some more flexible sort of regression model (perhaps including a non-linear term) could reduce bias. The trick would be to find something that’s still constrained enough so that bias improvements aren’t swamped by increased variance.

- Many of the applications that I am familiar with involve syntheses where some studies contribute multiple effect size estimates, which might also be inter-correlated. Very little work has examined how regression corrections like PET-PEESE perform in such settings (the only study I know of is Reed, 2015, which involves a specialized and I think rather unusual data-generating model). For that matter, I don’t know of any work that looks at other publication bias correction methods either. Or what selective publication even means in this setting. Somebody should really work on that.